Verification Is Possible: Checking Compliance With an Autonomous Weapon Ban

Published by The Lawfare Institute

Since about 2000, there has been an increase in the use of armed uncrewed vehicles, especially in the air. Around 40 countries possess armed uncrewed aerial vehicles (UAVs), and around 30 produce or develop them on their own. Some types have autonomous capabilities for following a certain path, for takeoff, and for landing. But the weapons themselves are controlled remotely by human operators who, with the help of video and other sensor information, assess the scene and decide which targets are to be attacked. However, important countries are intensively pursuing research and development toward the next technological step: letting the computer on board evaluate the situation, select targets, and engage them without human input or control. Such a setup would constitute an autonomous weapon system (AWS).

There are several military motives for extending the autonomy of machines to the attack function. The strongest is probably the markedly shorter reaction time of machines. Seconds of communication delay, and perhaps minutes of human deliberation, would no longer be needed: An AWS could react within seconds or fractions of a second. Communication and human reaction times do not matter in strongly asymmetric situations, but in armed conflict against a roughly equally capable enemy, they can mean the loss of the target, or even the destruction of one’s own systems before their weapons can be triggered. However, AWSs raise major problems: There is the risk of violations of international humanitarian law (IHL); there is the fundamental problem of whether a machine should be allowed to kill a human; and there are risks of escalation between two forces equipped with AWSs, which would destabilize the military situation, particularly in a severe crisis. Such a decrease in international security could also reduce the national security of the nations involved.

To prevent these dangers, members of academia and many nongovernmental organizations (NGOs) are demanding a legally binding international prohibition of AWSs, and since 2014, states have regularly held expert discussions about the regulation of lethal autonomous weapon systems (the term used in the framework of the Convention on Certain Conventional Weapons, or CCW). While more than 100 states have called for a legally binding instrument on AWSs, 10 are opposed. Unfortunately, because the CCW operates by consensus, a mandate for such negotiations could not be agreed on. The only result achieved so far is a set of guidelines from 2019: nonobligatory recommendations for how states should use AWSs. But these guidelines will not limit the dangers from such weapons. As soon as deployment begins in one important state, an escalating arms race is to be expected, a race to the bottom in which fear of faster advances by a potential enemy may lead to the deployment of systems with a high probability of violating IHL. Preventing such violations as well as military destabilization requires a legally binding prohibition of AWSs; this could be implemented by a positive rule that requires each attack in armed conflict to be controlled by a responsible and accountable human.

Prerequisite for Arms Control: Verification

Arms control treaties—mutual limitations of armaments and forces—need to be backed by mechanisms to verify that the treaty partners comply with the rules. Verification is crucial because if one partner secretly retains weapons, it could fatally attack another more honest partner. Fearing this, even originally honest parties would be motivated to covertly keep weapons. Such scenarios can be avoided if each party can convince itself reliably that the others are keeping their obligations.

In past internal discussions of whether a state should enter an arms control agreement, verification ranked high on the agenda; sometimes alleged nonverifiability was used as a pretext to avoid arguing openly against arms control. The issue is particularly important in the U.S., where ratification of international treaties requires a two-thirds vote in the Senate. An important case is the Comprehensive Nuclear Test Ban Treaty (CTBT, not yet ratified); in the Senate debate, verifiability was one major point of contention. Following the 1999 rejection of the CTBT, the U.S. government commissioned the U.S. National Academies to study the technical issues; one report was published in 2002, a more comprehensive one in 2012. But the U.S. still has not ratified the treaty.

It is striking that, unlike in earlier arms control discussions, the verifiability issue does not yet play a prominent role in the AWS ban debate. Some observers mention it as a problem. The U.S. National Security Commission on Artificial Intelligence even states that “arms control of AI-enabled weapon systems is currently technically unverifiable”; however, the commission proposes to “pursue technical means to verify compliance with future arms control agreements pertaining to AI-enabled weapon systems.”

The problem is that the very same weapons or carriers could attack either under human remote control or autonomously under computer control. From an international security as well as verification perspective, a complete ban of armed uncrewed vehicles would be best. Then the capability to carry weapons would show up in externally observable features, such as bomb bays, hard points under the fuselage or the wings, or machine guns. Their absence could be verified during on-site inspections plus maneuver observations as under the (now defunct) Treaty on Conventional Armed Forces in Europe (CFE Treaty) and the Vienna Document of the Organisation for Security and Co-operation in Europe (OSCE, which is still valid).

However, this train has left the station: too many countries already own armed UAVs and will not give them up any time soon. The situation is different for AWSs: While there are systems for point defense, AWSs that would search for targets in some region do not yet exist, although early models may have been used in 2020 in Libya and in 2023 in Ukraine. Thus armed forces would not have to forgo capabilities that they feel strongly dependent on, and a preventive ban is still possible. However, this window of opportunity may close in a few years. Such a ban would prohibit AWSs (with exceptions for close-in defense systems with an automatic mode such as the U.S. Phalanx), while remotely controlled weapon systems would still be allowed. The same physical systems could work in both roles; the only difference would lie in the control software: Is human remote command needed for an attack, or can the computer on board “make” the decision? For verification, the question is: How could one find out whether a weapon system has an autonomous capability? In principle, one could inspect the software, but armed forces would certainly see this as much too intrusive. And even if an inspection of the control program were allowed and confirmed that no autonomous attack function was present, a modified version containing autonomous attack could be uploaded quickly afterward. Thus, advance confirmation that an uncrewed weapon system seen during an inspection could not attack autonomously is virtually impossible.

Solution: Check Human Control After the Fact

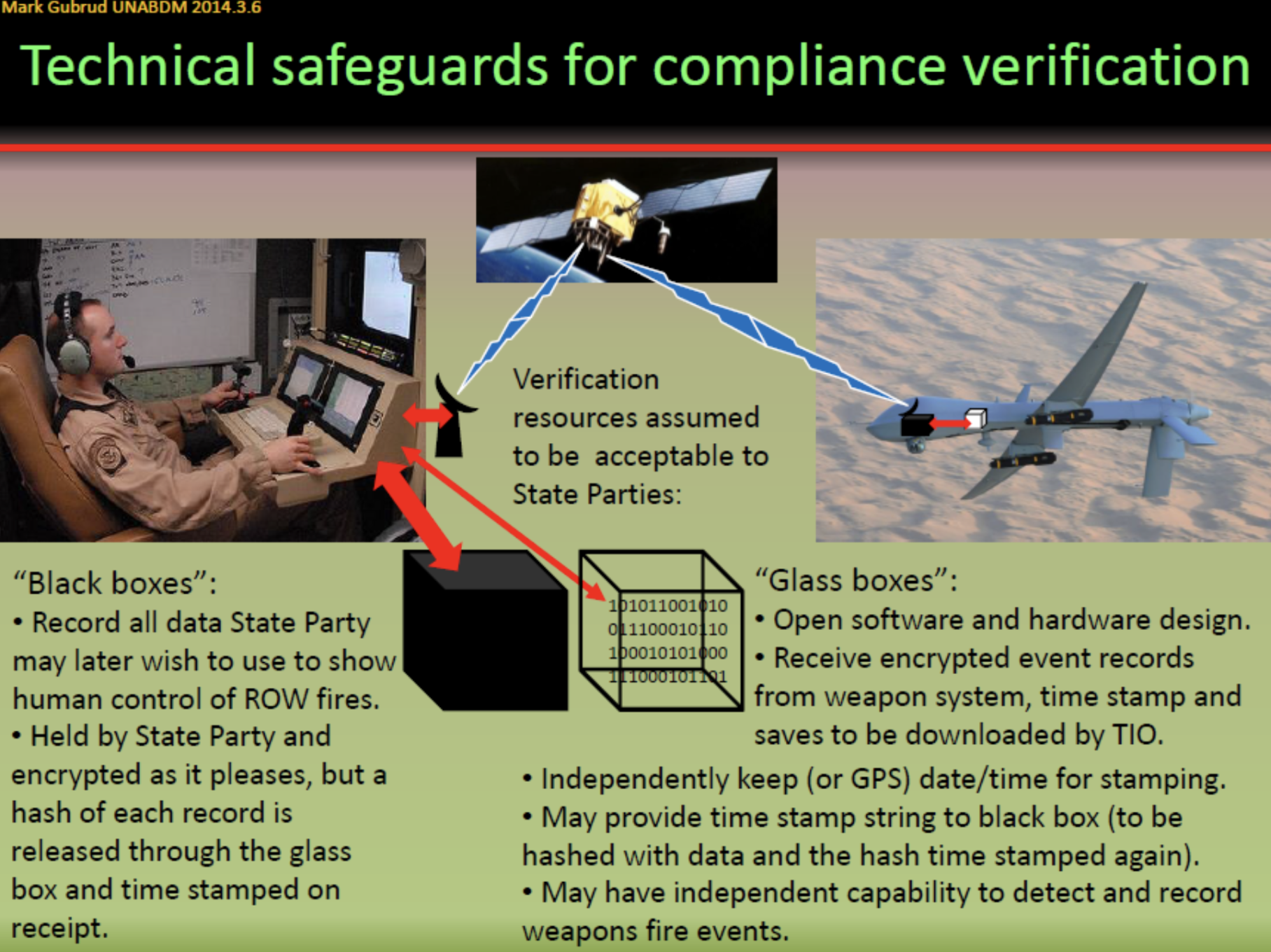

As Mark Gubrud and I pointed out in 2013, there is a way out: checking after the fact whether attacks were carried out under human control. For this, an international AWS ban could stipulate that in armed conflict, every single attack by an uncrewed armed vehicle or other uncrewed weapon system (which I refer to as remotely operated weapons, or ROWs, from now on) be carried out under meaningful control by a responsible and accountable human (in a differentiated way). States could create an international treaty implementation organization (TIO) for verifying compliance. Real-time monitoring of events on the battlefield would be too intrusive, could impede military action, and would in principle open pathways for enemies to gain combat-relevant information. But the warring parties could internally record all relevant information around each attack securely in “black boxes” and make it available to the TIO later, when it would no longer have a direct influence on the hostilities. The digital information stored would include video and other data that was presented to the operator; a video view of the console with the operator’s hands on the controls; the commands received and issued, from immediately prior to selection of the target until shortly after the engagement command; and the identities of the accountable operator and the accountable commander. Further information would be stored in parallel in a black box on the ROW: events such as the launch of a missile, the firing of a gun, or another engagement action of a weapon, together with the time. These two primary records of the engagement would be held by the respective state parties.

A method is needed to make sure that the information handed to the TIO later is correct and complete. Computing a cryptographic hash function over the respective information could accomplish this. The resulting hash code is much shorter than the original message but would differ if even a single bit were changed, while reconstructing the original information from it is computationally infeasible. The hashes from the control station and from the ROW, together with the respective time stamps, would constitute unique use-of-force identifiers (UFIs) to be stored in “glass boxes.” Immediately after the engagement, the UFI from the console glass box would be transmitted via the communication link and also stored in the ROW glass box. The control-station time stamp has to be prior to the ROW one. Its storage in the ROW glass box would be proof that a communication link between console and ROW existed at the time of the engagement. The stored hash codes would be downloaded from the console and the ROW periodically during on-site inspections by the TIO. For a ROW that self-destructs in attack, a somewhat different scheme would be used. Its pre-attack information would be sent to the control station immediately and stored there, where both hash codes would be computed and stored.
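The hashing step described above can be illustrated with a minimal Python sketch. It is not the scheme's specification: the choice of SHA-256, the `UFI` class, the helper names, the placeholder record contents, and the use of seconds as time stamps are all assumptions made here for illustration.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class UFI:
    """Hypothetical use-of-force identifier: a hash code plus its time stamp."""
    digest: str       # hex-encoded SHA-256 of a black-box record (assumed hash)
    timestamp: float  # time of the record in seconds (assumed format)


def make_ufi(record: bytes, timestamp: float) -> UFI:
    """Hash a black-box record; only this short code goes into the glass box."""
    return UFI(hashlib.sha256(record).hexdigest(), timestamp)


def timestamps_consistent(console: UFI, row: UFI) -> bool:
    """The control-station time stamp has to be prior to the ROW one."""
    return console.timestamp < row.timestamp


# Placeholder stand-ins for the real black-box contents.
console_record = b"operator video, console view, commands, operator and commander IDs"
row_record = b"weapon release event log"

console_ufi = make_ufi(console_record, timestamp=1000.0)
row_ufi = make_ufi(row_record, timestamp=1000.4)

# Flip one bit of the console record: the hash code changes.
tampered_record = bytes([console_record[0] ^ 0x01]) + console_record[1:]
tampered_ufi = make_ufi(tampered_record, timestamp=1000.0)

print(console_ufi.digest != tampered_ufi.digest)    # True
print(timestamps_consistent(console_ufi, row_ufi))  # True
```

Whatever the size of the recorded video and data, the stored code in this sketch is always 64 hexadecimal characters, which is why the glass-box contents stay small and reveal nothing about the recordings.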

Figure 1. Scheme of the verification method: All information around an attack is recorded in “black boxes” at the control station as well as on the remotely operated weapon. This data remains under national control. Hash codes are computed over the respective information and stored in “glass boxes.” These codes are downloaded by the treaty implementation organization at on-site inspections or via a secure communication channel. Later, the TIO can request the original data and check its authenticity using the hash codes. (Figure by Mark Gubrud, used with permission. Originally shown in 2014 in a presentation to the UN Advisory Board on Disarmament Matters.)

For more frequent access, the codes may be stored on a national server where the individual control stations would have transferred their glass-box data, to be downloaded remotely by the TIO via a secure channel. The TIO would keep the UFI database secure, but even if it were compromised this would not provide useful intelligence.

At an appropriate time after an event, when the original data is no longer relevant for the armed conflict, the TIO would request it. In the interest of demonstrating its compliance, the state party would hand over the data, and the TIO would check whether, under the hash function, this data yields the same hash code that was stored earlier. The time delay could be months, though likely not much longer than one year. The events to be investigated could be selected randomly, or on challenge if there are doubts. Indications of potential noncompliance could include seemingly too-rapid reactions on the battlefield or, in the case of a swarm attack, a number of independent sub-swarms that would require unrealistically many operators to control them.
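The after-the-fact check can be sketched in a few lines of Python. Again this is only an illustration under stated assumptions: SHA-256 as the hash function, the event identifiers, the dictionary standing in for the TIO's UFI database, and the function names `verify_record` and `select_for_audit` are all hypothetical.

```python
import hashlib
import hmac
import random


def verify_record(handed_over: bytes, stored_digest: str) -> bool:
    """Recompute the hash of the handed-over data and compare it with
    the hash code the TIO downloaded earlier."""
    recomputed = hashlib.sha256(handed_over).hexdigest()
    return hmac.compare_digest(recomputed, stored_digest)


def select_for_audit(event_ids, k, rng):
    """Randomly pick k events to investigate (challenge requests would
    be added separately)."""
    return rng.sample(sorted(event_ids), k)


# Hypothetical national records, held by the state party until requested.
national_records = {
    "event-001": b"black-box data for engagement 1",
    "event-002": b"black-box data for engagement 2",
    "event-003": b"black-box data for engagement 3",
}

# Hypothetical TIO database: only hash codes, collected shortly after each event.
tio_database = {
    event_id: hashlib.sha256(data).hexdigest()
    for event_id, data in national_records.items()
}

# A real selection would use an unpredictable source; a fixed seed keeps
# this sketch reproducible.
to_audit = select_for_audit(tio_database, k=2, rng=random.Random(0))

for event_id in to_audit:
    ok = verify_record(national_records[event_id], tio_database[event_id])
    print(event_id, "authentic" if ok else "MISMATCH")

# Data edited after the fact no longer matches the stored code.
print(verify_record(b"edited data", tio_database["event-001"]))  # False
```

A match shows that the data handed over is the data that was hashed at the time of the engagement; judging whether that data shows meaningful human control remains a separate task for the TIO's inspectors.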

Acceptability and Next Steps

From a technical perspective, this verification method does not create a big hurdle. Armed forces record their remote control attacks as a matter of routine. The only things that would have to be added are the algorithms producing the hash codes and the glass boxes for storing the results with times, together with means for readout of UFI lists and ROW hash codes during TIO inspections, or for secure remote download. All this can use software that exists and is in widespread use.

The same holds for the financial aspect. The additional cost would be negligible compared with the cost of developing and maintaining ROWs.

The main problem is the political will. This requires that states understand that an AWS prohibition is in their own enlightened national-security interest.

Compliance would be strengthened and the proposed verification scheme made easier by several measures:

- Information should be exchanged about remotely controlled uncrewed weapon systems, similar to what had been agreed upon for major conventional weapon systems in the CFE Treaty and the Vienna Document of the OSCE. Here photographs are to be provided for the existing types; for battle tanks and heavy armament combat vehicles, the main-gun caliber and unladen weight are to be given. For an AWS prohibition, it would be useful to add the size of the uncrewed, remotely controlled weapon systems.

- There should be an obligation to accept maneuver observations and inspections of bases. Optimally such activities would be carried out by the TIO, following the example of the Organization for the Prohibition of Chemical Weapons (OPCW). The alternative, having states observe and inspect each other, organized via the TIO, following the model of the OSCE Forum for Security Co-operation, would be less appropriate for an international treaty.

- To find indications of noncompliance, information from witnesses could be collected and the internet could be searched.

An unknown is whether states will decide in the future to make available original records after a period of time in order to demonstrate their compliance to the world or their own publics.

The general idea of providing secure records of attacks by ROWs could also be useful before legally binding, treaty-based arms control can become possible, namely as a part of confidence- and security-building measures. Here a trusted intermediary would be needed that would hold the hash codes and could confirm the authenticity of recordings when asked.

Conclusion

While advance verification that an uncrewed armed vehicle or weapon system cannot attack autonomously is practically excluded, compliance with an AWS ban, expressed as an obligation that each attack be carried out under meaningful control by a responsible and accountable human, can be checked with an adequate degree of certainty after the fact by the scheme described: All relevant data and actions around an attack by a remotely operated weapon would be recorded securely by the owning side, which would keep the data in its custody. Only a hash code of this data, from which the original information cannot be reconstructed, would be transferred to the TIO. At some later time, the TIO would ask for the original data and examine it, applying the same hash function to it; the authenticity would be proved if the new hash code is identical to the one transferred shortly after the attack.

The general concept outlined here needs further research and, in particular, development and testing. Glass boxes, algorithms, and communication to the TIO can be developed and tested in simulations. Rules for weapons that self-destruct on attack need to be devised, and inclusion of automatic close-in defense systems should be investigated. The possibility of cheating, and countermeasures against it, need to be studied systematically. Computer scientists and engineers should take up such tasks. Later hardware tests and evaluations require cooperation by states; these could follow the examples of the Trilateral Initiative, the United Kingdom-Norway Initiative, the Quad Nuclear Verification Partnership, and the International Partnership for Nuclear Disarmament Verification (IPNDV).

If states understood that the AWS ban fosters their (enlightened) national security, they would be interested in actively demonstrating compliance. The main task ahead is strengthening this insight in the relevant countries, in particular those that are still opposed to a ban. Disseminating the information that verification is possible could be a part of convincing decision-makers and the public.