Avoiding a Military-AI Complex

Published by The Lawfare Institute

in Cooperation With

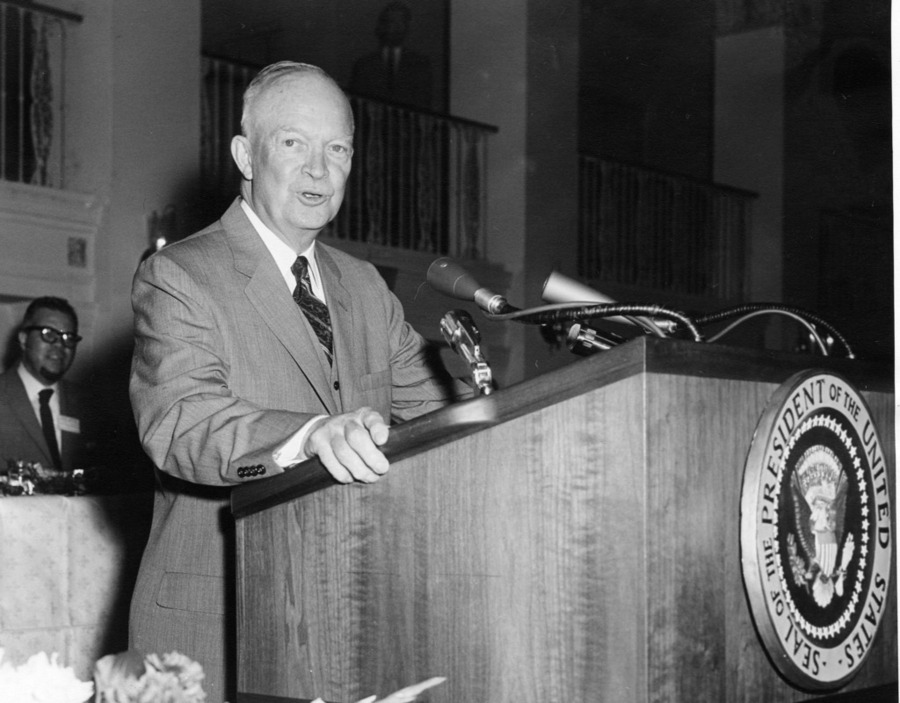

Ike warned this would happen. In his 1961 farewell address, President Eisenhower foresaw the “potential for the disastrous rise of misplaced power” as a result of private industry capturing an “immense military establishment.” What he couldn’t have known was that the military-industrial complex would transform into the military-artificial intelligence (AI) complex. The recently promulgated National Security Memorandum on AI (NSM) will establish just that if it is left in place or if its underlying goals and actions shape future executive action.

Whether President-elect Trump follows through on the NSM in his second term is an open question. On the one hand, Trump has previously expressed a desire to rescind the Biden executive order that called for the NSM. On the other hand, Trump has repeatedly emphasized a desire for the United States to lead in AI innovation, and implementation of the NSM would further that goal. So while the NSM may not persist in form under Trump, it seems likely to carry on in substance. That said, if the second Trump administration wants to avoid concentrating power in the hands of a few AI actors, then it may want to reevaluate the decision to “double down” on an AI industry marked by a high degree of concentration. A more sustainable and beneficial approach would instead focus the nation’s AI policy on peaceful applications and encourage more competition in the industry.

The Biden administration issued the NSM pursuant to its October 2023 executive order on AI. The NSM sets forth three objectives. The first is to ensure that the U.S. leads in developing “safe, secure, and trustworthy AI.” Realizing this end involves the federal government actively helping private actors acquire the resources and talent necessary to create frontier AI models. The second is to incorporate those AI advances into the work of the government itself, with a specific focus on enhancing national security through strategic adoption of AI. The third is to sustain the federal government’s ongoing efforts to create international governance frameworks around the development and use of AI.

A wide range of agency actions outlined in the NSM gives a sense of how the Biden administration planned to achieve those goals and, perhaps, what administrative actions we may see in the lame-duck period. For example, the Department of State, Department of Defense, and Department of Homeland Security are tasked with exploring ways for the federal government to recruit and retain AI experts from around the world. The Department of Defense and Department of Energy are charged with “design[ing] and build[ing] facilities capable of harnessing frontier AI for relevant scientific research domains and intelligence analysis.” The NSM also calls on a number of actors, such as the Department of Defense and Department of Energy, to make substantial investments in AI technologies. In short, the NSM is the “nation’s first-ever strategy for harnessing the power and managing the risks of AI to advance our national security.”

The NSM makes clear that the federal government plans not only to invest in AI but also to deploy it as a means of achieving national security aims. Here’s a sample of the Biden administration’s thinking:

If the United States Government does not act with responsible speed and in partnership with industry, civil society, and academia to make use of AI capabilities in service of the national security mission—and to ensure the safety, security, and trustworthiness of American AI innovation writ large—it risks losing ground to strategic competitors.

The Biden administration caveats these lofty goals with a disclaimer, which reads: “[T]he United States Government must also protect human rights, civil rights, civil liberties, privacy, and safety, and lay the groundwork for a stable and responsible international AI governance landscape.” It’s unclear how the government will establish such protections. National Security Adviser Jake Sullivan admitted that “innovation has never been predictable, but the degree of uncertainty in AI development is unprecedented.” That uncertainty makes it difficult to safeguard civil liberties and human rights amid the unfettered pursuit of AI technology. Even today’s comparatively simple AI systems have already facilitated biased decision-making.

What’s not subject to interpretation is that frontier AI technology is now at the core of the nation’s defense policy. Sullivan confirmed as much in remarks on the NSM. He said, “In this age, in this world, the application of artificial intelligence will define the future, and our country must once again develop new capabilities, new tools, and ... new doctrine.”

A handful of actors stand to dominate this new defense priority. Anthropic, OpenAI, Google DeepMind, and a small group of other big tech companies operate at the frontier of AI development. High barriers to entry entrench their position: The resources required to train leading models are exorbitant and growing, perhaps by a factor of 2.4 per year. Expedited procurement procedures called for by the NSM will allow these companies to exploit the fact that they alone have developed the sorts of foundation models that the government may be seeking. Other, smaller labs are simply too far behind in the development process to compete meaningfully with these AI giants in the short to medium term. By way of example, it cost Google upward of $100 million to train its Gemini model, and upstarts do not have that kind of money. It’s hard to see how this dynamic won’t result in the sort of misplaced power, unaccountable to voters, that Eisenhower feared.

Sullivan held up Eisenhower as a president who presided over an era that required “new strategies, new thinking, and new leadership.” Yet despite invoking Eisenhower, Sullivan and other members of the Biden administration failed to heed Ike’s advice or to mirror his willingness to break with conventional thinking. A review of the lengthy NSM suggests that its authors attempted to gloss over the fact that hasty, unchecked investment in weapons of unknown yet massive capability has been tried before. In celebrating the fact that the United States’s national security institutions “have historically triumphed during eras of technological transition,” the NSM omits any mention of the wasteful, dangerous period of nuclear weapons development. Instead, the memo paints a rosier picture: “To meet changing times, [national security institutions] developed new capabilities, from submarines and aircraft to space systems and cyber tools.” The memo’s only explicit reference to anything nuclear concerns nuclear propulsion.

The NSM fails to confront the dozens of disclosed “near misses” of all-out nuclear war during the Cold War, such as the Cuban missile crisis. Just as the U.S. relentlessly added to its nuclear stockpile for much of the Cold War, the NSM charts a single course and does not consider a plan B. The only path forward is armament, with occasional consideration of civil rights, human rights, and civil liberties. Rather than thoroughly analyze when and to what extent the intelligence community should acquire and use AI, the NSM instructs the intelligence community to consider the constitutionality of different use cases. Rather than evaluate means to slow the AI arms race, it calls on the federal government to cooperate with allies in the co-development and co-deployment of AI capabilities. And rather than facilitate an iterative, reversible adoption of AI by different agencies, it sets a default of ever-greater integration of AI into agency tasks and operations. The cumulative result is a government that is especially reliant on AI working as intended, continuing to improve, and improving faster than adversaries’ AI. This sort of bet on a resource-intensive and poorly understood technology exposes the American people to tremendous risk in the event of a technical or financial collapse of the AI ecosystem.

President Kennedy, in the aftermath of the Cuban missile crisis, realized that the United States’s “doubling down” on nuclear weapons amounted to a profound waste. While he noted that “the expenditure of billions of dollars every year on weapons acquired for the purpose of making sure we never need to use them is essential to keeping the peace,” Kennedy also lamented that the “acquisition of such idle stockpiles—which can only destroy and never create—is not the only, much less the most efficient, means of assuring peace.” This realization led to action. Months after the Cuban missile crisis, Kennedy announced the Nuclear Test Ban Treaty. He turned one of the most dangerous events in American history into an opportunity to mutually lower the odds of destruction and reduce wasteful spending.

Now, as was the case then, militarization of advanced technology with an eye toward war has become the default strategy. It’s not the best one, though. The pursuit of peace may be the more difficult route because “frequently the words of the pursuer fall on deaf ears.” However, “we have no more urgent task.”

The next administration need not and should not abandon efforts to accelerate AI. AI directed toward the public good, such as disease detection or hastening the transition to autonomous vehicles (AVs), can produce immense human flourishing. AI directed predominantly toward national security will delay the realization of those ends. It’s true that some commercial and public applications of AI may emerge from exploring the use of AI in a national security setting. It’s also true that those applications are even more likely to emerge if they become the primary focus of AI labs and national policy. More than 42,000 people died in motor vehicle accidents in 2022. AI has enabled AV companies to drastically improve the sophistication and reliability of their vehicles. A national focus on accelerating AV progress through AI resources could save thousands of lives in the relatively short term. Similar stories can be told with respect to AI-informed cancer treatments and improved predictions of extreme weather. If the government oriented its AI strategies around these applications, the improvement in average well-being could be significant.

An alternative set of principles grounded in the lessons of the past should guide the use of AI for national security. First, government adoption of AI should facilitate a competitive AI market. A broader range of AI labs competing for government contracts will give the government and, by extension, the people greater choice of models based on factors such as civil liberties, human rights, and AI safety. This would reduce the odds of yet another defense sector being dominated by a handful of companies that may not be as stable as hoped. Excessive reliance on Boeing, for example, has placed the nation in an unfortunate position, one it could have avoided had this principle been adopted sooner. The Congressional Research Service recently published a report analyzing the threats to national security posed by Boeing’s ongoing struggles. Boeing accounts for 3.3 percent of U.S. defense contracts. It is one of just three suppliers competing to launch U.S. national security satellites. It ranks as the fourth-largest Defense Department contractor. Congress now has to weigh some serious options to stave off the worst outcomes should Boeing go bankrupt. One option involves Congress overseeing Boeing’s defense program. Another would task Congress with monitoring Boeing’s finances and operations. The need for these and other options could have been avoided by insisting on a principle of competition.

Second, deescalation rather than peace through AI acceleration should guide relations with China and other adversaries. A hawkish mentality may score political points, but we should strive to rise above petty politics when thinking about the best use cases of this incredible technology. The end of the Cold War and an overall reduction in the risk of nuclear war turned, in part, on a willingness by the U.S. and U.S.S.R. to scale back their respective weapons programs. Ideally, this AI era will skip the build-out phase and start with a default of deescalation. This approach would steer valuable, finite resources away from the continual build-out of ever more sophisticated AI-based weapons and lower the odds of global tensions reaching a boiling point.

Third, to the extent AI informs weapons systems and other national security aims, the government should rely only on thoroughly vetted models. The NSM instead calls for the government to develop and deploy leading AI models. Frontier AI models contain many unknown unknowns. As discussed by Ashley Deeks, AI weapons systems may interact with one another in unpredictable and uncontrollable ways. Advanced AI weapons systems may also “go rogue” and commit unauthorized attacks. Commitment to using only rigorously tested, perhaps slightly less advanced models would help prevent such outcomes.

The path forward for U.S. AI policy requires a shift away from unchecked militarization and toward applications that maximize public welfare. While the NSM’s objectives underscore the need for American leadership in AI, focusing on AI primarily for defense risks escalating tensions and consolidating power within a small circle of influential tech companies. Lessons from the Cold War remind us that relentless arms races come with severe risks—ones that cannot be mitigated by superficial nods to civil liberties. Instead, the next administration should prioritize competitive markets, peaceful applications, and rigorous vetting of AI systems before deployment. By emphasizing these principles, the U.S. can harness AI’s transformative potential responsibly. The ultimate measure of AI policy success lies not in military supremacy but in achieving a safer, more innovative, and more prosperous society.
