Dangers Posed by Evidentiary Software—and What to Do About It

It's well known that code is buggy; that's why software updates for anything from apps to operating systems are now the norm. But if the public understands this, the courts have not followed suit.

Published by The Lawfare Institute

In the UK, there was a major long-running scandal involving the Post Office Limited (POL)—not to be confused with the government-owned Royal Mail, which delivers the mail—and its many-year pursuit of "subpostmasters" whom it accused of theft. The evidence brought against the accused came from discrepancies in the software accounting system. In thousands of cases, some involving quite large sums of money, the central accounts didn’t match the subpostmasters' accounts, and the POL accused thousands of the subpostmasters of stealing funds. The accused had little recourse in the legal proceedings; in 1997, the UK Law Commission recommended that a computer should be assumed to be operating properly unless there is explicit evidence otherwise (a claim by a defendant that there was an error doesn't count). In the U.S., the situation is somewhat different. U.S. defendants can request access to the evidence, but, as Judge Stephen Smith has detailed, federal courts show increasing deference to so-called "law enforcement privilege"—the withholding of information about evidence-gathering techniques during a trial—which can extend to software and prevent its examination for errors.

The actions in both nations are worth examining. The miscarriage of justice in the UK situation is striking in and of itself. The POL may be the nation's largest retailer, and yet it prosecuted its employees while its own software was at fault. The U.S. situation is of interest, too; U.S. courts are becoming aware of the issue of software fallibility in part because of some work my colleagues and I have done. Together, the examples in the UK and U.S. context underscore the dangers posed by relying on software—DNA testing, computer forensic analysis and more—as a witness, and the ways in which courts can accommodate the problem.

The POL, an English and Welsh firm, is a big business with 17,000 branches. In addition to selling stamps and shipping packages, branches provide bank transfers, sell lottery tickets and the like. Small branches of the post office—sub-post offices—are run by self-employed subpostmasters (SPMs). Such small businesses typically thrive on personal contact, and so, not surprisingly, the SPMs form part of the fabric of their communities.

From 2000 to 2014, the POL found shortfalls in many of the SPMs' accounts. Some accounts were off by hundreds of pounds, others by thousands. The SPMs could find no reason for the claimed shortfalls—and were given no explanation by the POL. In actuality, several different types of synchronization errors were occurring in the software. In a typical case, a transaction at a sub-post office would register locally but not within the central system.
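To make that failure mode concrete, here is a minimal sketch of how a transaction that registers locally but never reaches the central system produces an apparent discrepancy with no theft involved. It is not based on Horizon's actual design; the `Transaction` type, the `reconcile` function and the amounts are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    txn_id: str        # hypothetical unique identifier
    amount_pence: int  # hypothetical amount recorded at the counter


def reconcile(local, central):
    """Compare a branch ledger with the central ledger.

    Returns both totals plus the transactions that appear on only one side.
    """
    local_ids = {t.txn_id for t in local}
    central_ids = {t.txn_id for t in central}
    only_local = [t for t in local if t.txn_id not in central_ids]
    only_central = [t for t in central if t.txn_id not in local_ids]
    local_total = sum(t.amount_pence for t in local)
    central_total = sum(t.amount_pence for t in central)
    return local_total, central_total, only_local, only_central


# The branch recorded three transactions; the third never reached the centre.
local = [
    Transaction("T1", 1200),
    Transaction("T2", 800),
    Transaction("T3", 4500),
]
central = local[:2]

local_total, central_total, only_local, only_central = reconcile(local, central)
print("difference in pence:", local_total - central_total)
print("recorded only at the branch:", [t.txn_id for t in only_local])
```

Comparing totals alone shows only that the two ledgers disagree. It takes a transaction-level comparison, which requires access to both sets of records, to show that the gap comes from a lost record rather than from missing cash.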

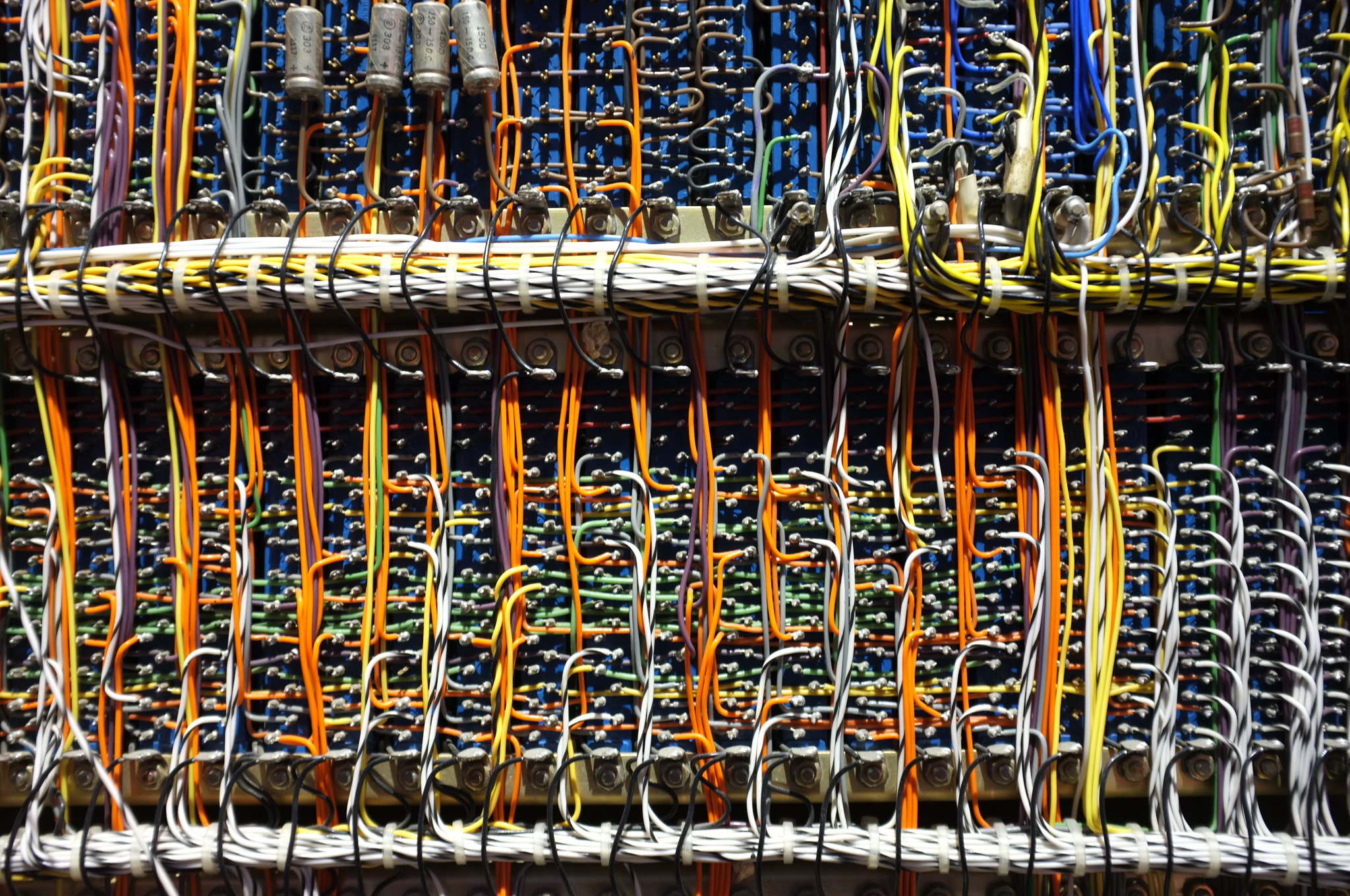

The problems began in 1999, when the Post Office adopted a highly distributed computing system, Horizon IT. When the SPMs complained that the Horizon central accounts were in error, there was some effort at investigation, but the POL internal probe was lax in many regards. The POL did not always check the audit logs to determine what had occurred. At times, Horizon IT personnel would simply "attribute some problems which they could not understand or resolve to SPM error."

For the SPMs, the problems were wrenching. A peculiarity—at least to U.S. eyes—is that in the English legal system, certain private entities can serve as prosecutors. The POL brought cases against 918 SPMs for theft of funds. There was little recourse for the SPMs, many of whom went to prison. Some lost their homes and pensions. Others went bankrupt as they paid off the POL claims. And they were effectively unable to defend themselves. They had no insight as to the cause of the "missing" funds and little help from the POL in tracking what had actually occurred.

In fact, many of the SPM account shortfalls had nothing to do with theft from the till; they were the result of buggy software. But the SPMs weren't privy to information about software problems. Indeed, until 2018, the POL withheld information about the Known Error Log—a database of known errors and workarounds in the IT system maintained by Fujitsu, Horizon’s manufacturer—from the SPMs and the courts. The problems with the software were only uncovered after the SPMs brought a civil case against the POL.

The civil suit was heard in the Queen's Bench Division of the High Court of Justice. After extensive investigation, in December 2019 the Honorable Mr. Justice Fraser ruled, "It was possible for bugs, errors or defects ... to cause apparent or alleged discrepancies or shortfalls relating to Subpostmasters’ branch accounts or transactions .... all the evidence in the Horizon Issues trial shows not only was there the potential for this to occur, but it actually has happened, and on numerous occasions." What had happened repeatedly, and in a variety of different circumstances, was that the Horizon software sometimes showed transactions that had not actually happened (the attempt had stopped partway through) and at other times completed transactions incorrectly.

The Criminal Cases Review Commission (CCRC), an independent board that considers cases of miscarriage of justice in England, Wales and Northern Ireland, began investigating the issue in 2015. The CCRC can refer cases to appeal only if new evidence has come to light that might overturn a conviction. The bugs, errors and defects that had been uncovered constituted such a reason, and the CCRC referred multiple SPM cases to the Court of Appeal and the Crown Court.

The CCRC received 61 applications from convicted SPMs; by January 2021, the Review Commission had referred 51 of these to appropriate appeals courts. Six convictions were overturned and the Post Office indicated it would not oppose another 38 of the appeals.

As the postal case was wending its way through the courts in 2020, four experts in the reliability of software-based systems—Peter Ladkin, Bev Littlewood, Harold Thimbleby and Martyn Thomas—wrote of the case, "[F]or any moderately complex software-based computer system, such as the IT transaction-processing system Horizon ... it is a practical impossibility to develop such a system so that the correctness of every software operation is provable to the relevant standard in legal proceedings." The scientists proposed a reversal of the Law Commission's 1997 recommendation—in other words, they recommended that courts should presume any software system has errors that make it likely to fail at times. This shifts the relevant question before a court to “how likely is it that particular evidence has been materially affected by computer error?”

The software engineers proposed a three-part test. First, the court should have access to the "Known Error Log," which should be part of any professionally developed software project. Next, the court should consider whether the evidence being presented could be materially affected by a software error. Ladkin and his co-authors noted that a chain of emails back and forth is unlikely to have such an error, but the time that a software tool logs when an application was used could easily be incorrect. Finally, the reliability experts recommended seeing whether the code adheres to an industry standard used in a non-computerized version of the task (e.g., bookkeepers always record every transaction, and thus so should bookkeeping software).

This group—along with well-known lawyers Paul Marshall and Stephen Mason; an academic criminal lawyer, Jonathan Rogers; a software testing and auditing expert, James Christie; and a statistician, Martin Newby—adapted for the public advice that had been requested by the UK Ministry of Justice. This included, "In principle, the threshold for rebutting the presumption so that the onus of proof is upon the party relying upon a document to prove it, and thus prove the integrity and reliability of its computer source (where otherwise hearsay), is low." This was not a standard that had applied in the POL cases. The authors blasted the POL for not revealing the existence of the Known Error Log during court proceedings and concluded that "every significant program will contain many bugs that will cause it to fail to produce correct results when some particular combination of input data is encountered."

Meanwhile, Steven Bellovin, Matt Blaze, Brian Owsley and I were examining the same concern from a U.S. perspective. Our approach was somewhat different from that of our UK counterparts for several reasons. On the legal side, there was no U.S. version of the 1997 Law Commission recommendation presuming the correctness of computer output, but there was the increasing problem of law-enforcement privilege. We focused on the consequences for "evidentiary software" (computer software used for producing evidence) when complex systems (the operating system, software and hardware) fail, as they in fact often do.

Inanimate objects have long served as evidence in courts of law: the door handle with a fingerprint, the glove found at a murder scene, the Breathalyzer result that shows a blood alcohol level three times the legal limit. But the last of those examples is substantively different from the other two. Data from a Breathalyzer is not the physical entity itself, but rather a software calculation of the level of alcohol in the breath of a potentially drunk driver. As long as the breath sample has been preserved, one can always go back and retest it on a different device.

What happens if the software makes an error and there is no sample to check, or if the software itself produces the evidence? At the time we wrote our article on the use of software as evidence, there was no overriding requirement that law enforcement provide defendants with the code so that they might examine it themselves.

Ample cases demonstrate the appropriateness of such a step. In a case concerning Breathalyzers, State v. Jane Chun et al., outside examiners found "thousands" of errors in the code the Breathalyzer was using. And it's not just commercial software that can go wrong. Errors similarly allowed misconfiguration of FBI wiretapping equipment, leading to overcollection. In 2000, when the FBI unit investigating Osama bin Laden misconfigured the wiretapping gear and overcollected, the technician was so upset by the fact that the FBI had wiretapped people without authorization that he destroyed all the collected material, including that on bin Laden. In a 2014 U.S. court case, computer forensics expert Jonathan Zdziarski discovered that the computer forensic tools—software—"used to initially evaluate the evidence ... had either misreported key evidence, or failed to acknowledge its existence entirely." The commercial tools misreported a device erasure and a backup restore, as well as the dates when an application was used and the times a file was accessed. The most serious charges were dropped as a result of Zdziarski's testimony.

What legal recourse is possible? In the cases just described, the software acts as a witness. The Confrontation Clause of the Sixth Amendment gives criminal defendants the right to know the nature of the evidence against them, and the Supreme Court has ruled that defendants have the right to confront those who testify against them: "Testimonial statements of witnesses absent from trial have been admitted only where the declarant is unavailable, and only where the defendant has had a prior opportunity to cross-examine."

Such rights have run into the problem of proprietary software. And here, the issue of law-enforcement privilege comes in. Since 1963, as a result of the Supreme Court ruling in Brady v. Maryland, the prosecution must provide the defendant with all exculpatory evidence in its possession. But as Smith showed, federal courts are increasingly recognizing an evidentiary privilege that protects law-enforcement investigatory techniques and procedures from being revealed in court. The situation is made worse by the fact that sometimes law enforcement doesn't actually have access to the software; the code is proprietary and law enforcement only receives the output (this is what happened, for example, in the Apple/FBI case over the locked San Bernardino phone). When law enforcement can’t get access to the software, then the defendant doesn't get access—and is thus unable to determine if the software had errors.

Over the last several decades, researchers have become better at producing safety-critical software. DARPA, for example, developed an "essentially" unhackable helicopter whose communications channel withstood six weeks of serious red-team hacking. But run-of-the-mill software is much more error-prone; CMU researcher Watts Humphrey reported that, on average, an experienced software developer introduces an error every ten lines of code. The best did much better; the top 20% of programmers produced 29 errors per thousand lines of code. Yet a Breathalyzer might have fifty to one hundred thousand lines of code, while complex programs, such as the Horizon system, are larger by several orders of magnitude.
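A rough back-of-the-envelope calculation shows what those rates imply for a program the size of a Breathalyzer's. The sketch below assumes the quoted rates scale linearly with program size, which is a simplification. It counts errors introduced while writing the code, not errors that survive review and testing. The function name is hypothetical, and no figure is computed for Horizon because the text gives its size only as "several orders of magnitude" larger.

```python
def expected_introduced_errors(lines_of_code: int, errors_per_kloc: float) -> float:
    """Errors introduced during development, assuming a constant rate per 1,000 lines."""
    return lines_of_code * errors_per_kloc / 1000


AVERAGE_RATE = 100.0  # one error every ten lines = 100 per thousand lines
TOP_20_RATE = 29.0    # top 20% of programmers, per thousand lines

for loc in (50_000, 100_000):
    average = expected_introduced_errors(loc, AVERAGE_RATE)
    best = expected_introduced_errors(loc, TOP_20_RATE)
    print(f"{loc:>7} lines: about {average:.0f} errors (average), {best:.0f} (top 20%)")
```

Even at the better rate, a program of fifty thousand lines starts with well over a thousand introduced errors that review and testing must then find.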

Any responsible software provider tests software extensively to see if it functions correctly before releasing it. Sometimes errors can be relatively easily found. A recent outage at a cloud service provider occurred when a single customer updated their settings in June after the company had released revised code in May. Pre-release testing hadn't uncovered the problem. Neither had the first month of usage. But the particular settings of that customer triggered the issue—and large parts of the Internet were unreachable for an hour or more.

Unfortunately testing, no matter how thorough, doesn't always turn up the problems. A misplaced "break" statement in a line of code in the AT&T switching system led to a widespread outage a month after a 1989 software update. The AT&T engineers hadn't even imagined this situation prior to deployment, and so they hadn't tested for this condition.
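The AT&T fault is commonly described as a C `break` nested inside an `if` within a `switch`, which left the enclosing `switch` rather than just the `if`. The Python sketch below is only a loose analogy, not a reconstruction of the switching code: a `break` written where `continue` was intended. The function name and message format are hypothetical. It shows how such a slip can pass every test that doesn't exercise the one condition nobody anticipated.

```python
def route_calls(messages):
    """Return the call ids routed from a batch of messages (hypothetical format)."""
    routed = []
    for msg in messages:
        if msg["kind"] == "status":
            # Intended behavior: ignore this status message and keep going,
            # which would be `continue`.
            # Bug: `break` leaves the whole loop, so every later message
            # in the batch is silently dropped.
            break
        routed.append(msg["call_id"])
    return routed


# A test batch with only ordinary calls passes, so the bug goes unnoticed.
ordinary = [{"kind": "call", "call_id": 1}, {"kind": "call", "call_id": 2}]
print(route_calls(ordinary))

# A batch with a status message in the middle loses the call that follows it.
mixed = [
    {"kind": "call", "call_id": 1},
    {"kind": "status"},
    {"kind": "call", "call_id": 3},
]
print(route_calls(mixed))
```

The defect is a single keyword and is plain to anyone reading the loop, yet no amount of testing with ordinary batches would expose it.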

Another example of where testing wouldn’t show the problem occurred with a version of Telnet, a protocol for communicating with a remote device, that provided encryption. Instead of generating a new encryption key for each session, almost all the time the key used was all 0's. But looking at the results of the code would show encrypted communications—and because of the use of different "initialization vectors," testing wouldn't reveal that all the communications were encrypted with the same key. In other words, mistakes happen with software and sometimes the only way to find errors is to study the code itself—both of which have important implications for courtroom use of software programs.
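The point about initialization vectors can be illustrated with a toy example. The sketch below is not the Telnet code and not a real cipher: it builds a simple XOR keystream from SHA-256 purely for demonstration, and every name in it is hypothetical. It also simplifies "almost all the time" to "always." What it shows is that, with a fixed all-zero key and differing IVs, the same message encrypts to different, random-looking bytes each session, so black-box testing sees nothing wrong.

```python
import hashlib

ALL_ZERO_KEY = bytes(16)


def buggy_session_key() -> bytes:
    # Bug: a fresh random key per session was intended;
    # this always returns sixteen zero bytes.
    return ALL_ZERO_KEY


def keystream(key: bytes, iv: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256 over key, IV and a counter. Illustration only."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + iv + counter.to_bytes(8, "big")).digest()
        counter += 1
    return out[:length]


def toy_crypt(key: bytes, iv: bytes, data: bytes) -> bytes:
    """XOR data with the keystream; the same call encrypts and decrypts."""
    return bytes(d ^ k for d, k in zip(data, keystream(key, iv, len(data))))


message = b"login: alice"
iv_a = bytes(15) + b"\x01"  # fixed IVs so the example is repeatable
iv_b = bytes(15) + b"\x02"

ct_a = toy_crypt(buggy_session_key(), iv_a, message)
ct_b = toy_crypt(buggy_session_key(), iv_b, message)

print("ciphertexts differ:", ct_a != ct_b)
print("round trip works:", toy_crypt(buggy_session_key(), iv_a, ct_a) == message)
```

Every observable check passes: the output differs from the input, differs between sessions and decrypts correctly. Only reading `buggy_session_key` reveals that anyone who knows the key is all zeros can decrypt every session.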

Given the high rate of bugs in complex software systems, my colleagues and I concluded that when computer programs produce the evidence, courts cannot assume that the evidentiary software is reliable. Instead the prosecution must make the code available for an "adversarial audit" by the defendant's experts. And to avoid problems in which the government doesn't have the code, government procurement contracts must include delivery of source code—code that is more-or-less readable by people—for every version of the code or device.

Our work has already had an impact. It figured in a New Jersey appeals court decision regarding a novel DNA analysis used in a murder case. Corey Pickett was accused of being one of two gunmen who shot into a crowd, killing one man and injuring a young girl. The two men were caught after a chase on foot; shortly afterwards police found a .38 caliber gun and a ski hat on the path they had taken. Traditional DNA analysis did not tie Pickett to the evidence, so law enforcement used TrueAllele DNA software to do a match. The TrueAllele product claims to do "probabilistic genotyping," which comes into play when investigators have DNA samples from several people. But, as the court noted, a recent study by the President's Council of Advisors on Science and Technology described the technology as still being developed and requiring "close scrutiny."

TrueAllele has not undergone this type of close scrutiny. Even if the science behind it had, the software implementing it needed to be closely examined as well. Emphasizing the points we had made regarding the need for adversarial examination of code, the court ruled, "Hiding the source code is not the answer. The solution is producing it under a protective order. Doing so safeguards the company's intellectual property rights and defendant's constitutional liberty interest alike. Intellectual property law aims to prevent business competitors from stealing confidential commercial information in the marketplace. It was never meant to justify concealing relevant information from parties to a criminal prosecution." The decision is binding in state courts in New Jersey.

More and more, lawyers on both sides of the Atlantic are recognizing the importance of ensuring the reliability of software for use in court. In early June, the UK barrister Paul Marshall laid out the various ways that the Post Office Limited—and, indeed, the UK government—had failed the SPMs, with the POL essentially betting its rich coffers against the ability of the SPMs to prevail against it. Marshall’s speech is well worth the read for anyone interested in ethics, the law and government.

As for trusting computer code, Marshall reminded his audience that the software was the only evidence against the SPMs: "If you remember only one thing from this talk, bear in mind that writing on a bit of paper in evidence is only marks on a piece of paper until first, someone explains what it means and, second, if it is a statement of fact, someone proves the truth of that fact." If you're looking at computer software providing evidence, then the software must be available for cross-examination—just as it would be for any witness. In the U.S., anything less vitiates the legal protections of due process and the Confrontation Clause. It results in conviction through mistakes, not facts.