The Facebook Oversight Board Should Review Trump’s Suspension

Checks and balances don’t exist only for decisions people agree with. Facebook should allow oversight of its most high-profile content moderation decision yet.

Published by The Lawfare Institute

in Cooperation With

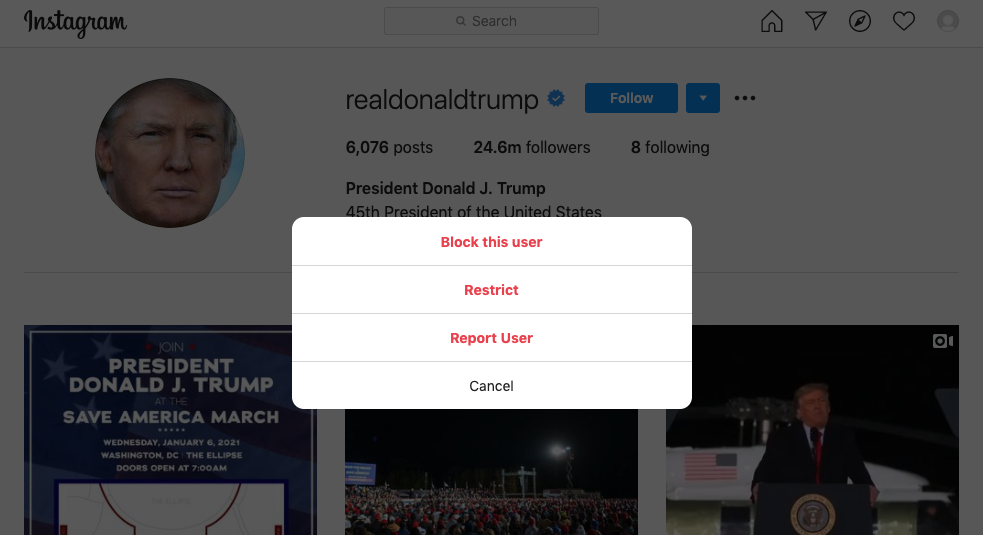

While Congress works out what form of accountability it will impose on President Trump for inciting insurrection at the U.S. Capitol last week, the president has faced a swift and brutal reckoning online. Snapchat, Twitch, Shopify, email providers and payment processors, among others, have all cut ties with Trump or his campaign. And after years of resisting calls to do so, both Facebook and Twitter have suspended Trump’s accounts.

This Great Deplatforming has ignited a raucous debate about free speech and censorship, and prompted questions about the true reasons behind the bans—including whether they stem from political bias or commercial self-interest rather than any kind of principle. Luckily, at least one platform has built a mechanism that is intended to allay exactly these concerns. Facebook should refer its decision to suspend Donald Trump’s account to the Oversight Board for review.

Ever since Facebook announced the launch of its Oversight Board in May, there have been constant questions about the board’s activities—or lack thereof. Researchers and journalists wanted to know why the board wasn’t weighing in on Facebook’s most controversial decisions and why the board wouldn’t be operational in time for the 2020 U.S. elections. One group of activists launched what it called “The Real Facebook Oversight Board” in response to the inaction of the official board. But now, in the wake of Facebook’s most high-profile and controversial content moderation decision in its history—the “indefinite” suspension of the account of the sitting president of the United States—the board can and should have a chance to prove its value.

The whole point of the board is, in the words of Facebook’s CEO Mark Zuckerberg, that “Facebook should not make so many important decisions about free expression and safety on our own.” Its job is to weigh in on the platform’s “most difficult and significant content decisions.” Suspending the account of the leader of the free world more than fits this bill; or at the very least, it should be left to the board to decide it doesn’t.

To explain why Facebook still holds the reins in deciding whether the board can weigh in here requires some background. It has taken longer than many expected for the board to become operational, and it is disappointing it wasn’t ready to go before now. Still, it takes time for what will hopefully be an enduring institution to kick into gear, especially during a pandemic (and no, it was never because they just didn’t have laptops). Regardless, the board is now up and running, and in December it announced the first batch of cases it is currently deliberating over. Users can submit appeals in cases where Facebook has taken content down on Facebook or Instagram.

However, Trump cannot appeal his profile’s suspension to the board directly. As I have repeatedly—and, if I’m being honest, monotonously—written about, the board’s limited “jurisdiction” is the biggest disappointment in the process of its establishment so far. At this stage, users can only appeal cases in which their content is taken down by Facebook, and not cases where Facebook leaves disputed content up. I will spare you yet another explanation of why this is inadequate and only note that I’m glad Facebook has committed to rectifying this design flaw soon.

But isn’t the decision to suspend Trump’s account a take-down decision? You’d think so—and yet it’s not. The answer to this question only further highlights the extreme narrowness of the board’s current remit. Because Facebook has decided to suspend Trump’s entire account, and not merely remove individual pieces of content the president has posted, the suspension does not meet the criteria currently set out in the board’s bylaws for appeals.

All hope is not lost, however. Facebook can, on its own initiative, refer cases to the board for review. Indeed, two out of the six cases currently being considered by the board are Facebook referrals. (Neither Facebook nor the board has said how many cases were referred by Facebook in total.) There is even a process for “Expedited Review” for “exceptional circumstances” under Article 2, Section 2.1.2 of the bylaws. In these cases, the board has 30 days to determine whether to let a decision by Facebook stand. If the decision to suspend “indefinitely” the account of the president of the United States on the basis that he incited insurrection and may continue to do so is not “exceptional,” then I am not sure what would be.

Facebook clearly has the power to send this case to the board, so the only question is whether it should. The answer is clearly yes. The decision is extremely controversial, polarizing and an exercise of awesome power. Facebook itself admits it was not an easy call. It is one Facebook resisted making for years. In announcing the decision, Zuckerberg noted the competing interests in public access to political speech and safety risks, given the possibility of further incitement to violence. Before the complete account ban, another Facebook executive defended a decision to remove a video message posted by the president on the day of the Capitol riot on the grounds that the country was in an “emergency situation” and “on balance” the video contributed to rather than diminished the risk of ongoing violence. These were not cut-and-dried calls.

The problem for Facebook is that the posts from the president the past week were arguably no worse than many he has posted before. What is different, however, is the political context, with the incoming presidency and Congress—that is, Facebook’s next regulators—now being controlled by Democrats. Whether Facebook’s decision is right or not, these factors raise doubts about the legitimacy of its decision and whether it was taken in the public interest or in the interests of Facebook. This is exactly the problem the Oversight Board was created to address: The board is an independent check and balance, meant to ensure the public interest has a voice in Facebook’s content moderation. For this reason, the lack of visible public pressure is no reason not to refer a decision. Oversight over only unpopular decisions or decisions the majority are unhappy about is not meaningful oversight at all.

The strongest argument against referral is that the president’s account, and the events of the past week, were so sui generis that the case raises no generalizable questions. Therefore, in this view, it’s not worth the board spending its time or institutional capital to weigh in on a debate that has no future application. That argument has some merit but, on balance, is not convincing.

Unfortunately, this is not the first time a political leader has used the platform to incite violence—nor will it be the last. Trump may be out of power soon, but Facebook won’t be, and the platform will be repeatedly confronted with the question of how to balance the public interest in holding leaders to account with the safety interests imperiled by leaving up certain content.

This decision also raises an important question of how much Facebook should consider political and social context outside the platform in making its content moderation decisions. Clearly what cemented Facebook’s decision was not the content Trump posted on Facebook, but the events at the Capitol, his speeches through other media, how people responded on other social media platforms such as the Trump-friendly Gab and Parler, and the extent to which this made Trump’s Facebook posts—again, no more objectionable than many of his posts—function as dog whistles to his supporters in a volatile environment. It is ridiculous to make content moderation decisions in a vacuum without looking to surrounding context. And yet this is often what platforms have done, because looking beyond their services raises some thorny questions. How far and where should they look, and are they really best placed to make these social and political calls? These questions about Facebook’s competency are even more acute in markets other than America, where Facebook is a foreign corporation. This is a fundamental and enduring issue the board can give Facebook guidance on.

Finally, there’s the issue of remedy. Zuckerberg said that Facebook will continue the block on the president’s accounts “indefinitely and for at least the next two weeks until the peaceful transition of power is complete.” This is an unusual remedy—I know of no case where Facebook has taken this approach of banning an account for an unspecified length of time before—and one that creates a great deal of uncertainty. Whether Trump will get his account back, in other words, appears to depend on how Zuckerberg feels after Biden’s inauguration. The Oversight Board might have thoughts about whether this is a legitimate way to resolve the years of sparring between Facebook and its most famous troll.

The Oversight Board was created to be a check and balance on Facebook’s decision-making processes. The goal is to give Facebook’s decisions legitimacy and make them more than just “Mark decides.” This is just as true, if not more so, when Mark decides with the winds of public opinion and political convenience as when he doesn’t.

Russian dissident Alexey Navalny captured the uneasiness some observers feel in the wake of the Great Deplatforming in a tweet thread calling Twitter’s ban of @realDonaldTrump an “unacceptable act of censorship.” Navalny argued that the decision was “based on emotions and personal political preferences” and “will be exploited by the enemies of freedom of speech around the world.” He concluded by saying, “If @twitter and @jack want to do things right, they need to create some sort of a committee that can make such decisions. We need to know the names of the members of this committee, understand how it works, how its members vote and how we can appeal against their decisions.”

Facebook has exactly this kind of committee ready to go, for exactly this situation. It should use it.
