Standards of Care and Safe Harbors in Software Liability: A Primer

Deciphering the Biden administration’s nascent software liability efforts.

Published by The Lawfare Institute

The National Cybersecurity Strategy (NCS) and NCS Implementation Plan attempt to reshape the laws governing software liability. Both proposals rely on creating a “standard of care” and a “safe harbor” against liability. But what do both of these terms mean, exactly? And why are they important?

Standard of Care

Simply put, software will never be perfect. Efforts to develop bug-free software are complicated by many factors, including the complexity of today’s software, the fact that even highly skilled developers make coding errors, and the fact that unexpected inputs and interactions between software programs can lead to bugs. So, for software developers and policymakers, the question becomes, “How good is good enough?” In law, a “standard of care” determines what constitutes acceptable, nonnegligent conduct. Meeting the standard of care normally means that the defendant will not be liable, even if their behavior causes harm: Accidents happen even to cautious drivers, for example. Likewise, the fact that software is hacked does not necessarily mean that its creator or distributor should be liable. A company can do everything right and still be vulnerable to hacking (for example, by nation-state-sponsored threat actors and other sophisticated adversaries). What’s more, a standard of care need not be equivalent to optimal care. In the medical context, for example, doctors are not required to provide the best care possible, nor are they held liable every time there is a bad outcome; rather, the standard of care for health care providers set by most states is “that level of care, skill and treatment which, in light of all relevant surrounding circumstances, is recognized as acceptable and appropriate by reasonably prudent similar health care providers.” Similarly, in the software liability context, a standard of care could simply require the level of diligence that a reasonably prudent software designer, programmer, or tester should exercise in the same or similar circumstances. For example, the software community generally agrees that hard-coding passwords is a design and programming choice that no reasonable software engineer would make. Failing to meet such a standard constitutes negligence and can make the responsible party liable for damages that arise from the failure.
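To make the hard-coded-password example concrete, the minimal Python sketch below contrasts a credential embedded in source code with one supplied at run time. It is illustrative only: the variable name `DB_PASSWORD`, the helper `get_db_password`, and the choice of an environment variable are hypothetical assumptions (a secrets manager would serve the same purpose), and nothing here is drawn from the NCS or from any legal standard.

```python
import os

# Anti-pattern (hypothetical): a credential embedded in source code is shared
# by every copy of the program and cannot be changed without a new release.
HARDCODED_PASSWORD = "admin123"  # illustrative only; do not do this


def get_db_password() -> str:
    """Read the password from the environment at run time instead.

    DB_PASSWORD is a hypothetical variable name chosen for this sketch.
    """
    password = os.environ.get("DB_PASSWORD")
    if not password:
        # Fail loudly rather than silently falling back to a built-in default.
        raise RuntimeError("DB_PASSWORD is not set; refusing to use a default")
    return password
```

The point of the contrast is that the second version lets each deployment supply and rotate its own secret, which is the kind of choice a "reasonably prudent" engineer would be expected to make.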

The tremendous diversity of the software market makes it challenging to adopt a one-size-fits-all approach to software security. At present, there is no single, generally accepted standard for securing the diverse array of software products and services. To manage this diversity, one could imagine at least three different types of standards.

First, one could simply require that software developers “act reasonably.” The key benefit of this approach is flexibility. A “reasonableness” standard can be applied broadly to software developers of different sizes and sophistication producing software intended for use in a wide variety of environments with varied cyberthreat profiles. The allure of such an approach is that the same basic “reasonableness” concept could apply differently based on the context and therefore could be highly responsive to localized industry characteristics. (This leaves open the very difficult questions of defining the relevant industry and, as such, which standard applies.) Moreover, litigating whether a software developer’s design and implementation were negligent will also serve an important information-forcing function, which is particularly useful because such information is ordinarily both expensive and difficult to acquire.

However, standards based on “reasonableness” can be uncertain and unpredictable, leaving software developers unclear as to whether a particular course of action will be deemed “reasonable.” In the face of uncertainty, software developers could “play it safe” to avoid liability, potentially leading to overcompliance. Alternatively, some might take a chance and act less carefully, knowing that there is a possibility of escaping liability under a reasonableness inquiry, thereby leading to undercompliance. Accordingly, uncertainty can lead to over- or undercompliance, depending on the circumstances. This is a key point: Error costs are inherent in a standards-based negligence regime and will be attributable to both Type I (false positive) and Type II (false negative) errors. Software developers may not have sufficient guidance to predict their potential liability and accurately assess risk on the front end, resulting in either over- or undercompliance. To address this concern, a standard of care could incorporate guideposts for software developers, effectively laying out the factors that a regulator or judge will weigh in evaluating liability. The fair use doctrine in copyright law offers an example of such an approach, specifying four factors for determining whether something is fair use and offering some fairly concrete requirements as subsets of those four factors. Policymakers could take the same approach to defining reasonableness standards in the context of software development. Identifying a certain number of key factors to be considered in the reasonableness determination would serve as a guide to developers: The more the code complies with the relevant factors, the less likely it will face liability.

Alternatively, a standard of care could be rooted in products liability law. Specifically, under the “design defect” prong of products liability law, the essential question would be “whether—in light of knowable risks and feasible alternatives—a different design could have and should have been selected.” By focusing “on whether the product’s design is safe, not on what steps the manufacturer has or has not taken,” products liability law is “future oriented and requires companies to invest in better practices to address security risks, including risks that are unknown but reasonably discoverable,” according to Chinmayi Sharma and Benjamin Zipursky, two prominent legal scholars who have proposed using products liability law as the foundation for a new software liability regime. As Sharma and Zipursky explain, a key characteristic of this approach is that neither compliance with industry standards nor a record of diligent efforts will necessarily immunize defendants from liability. This approach, however, is not without drawbacks, many of which stem from the highly fact-specific nature of products liability litigation, which can render it both time-consuming and expensive. For example, some jurisdictions evaluate “design defect” claims using a “risk-utility” test, which requires courts to retrospectively assess whether it was practically and economically feasible for the manufacturer to adopt a safer alternative design. Such assessments are fact specific and expensive, requiring a considerable amount of information (such as which alternative designs were available and what their cost structures were).

Regardless of the approach, certain questions must be considered—for example: What standards should a product meet before going to market? What is the duty of a vendor to provide security support for a product after it goes to market? How long should a vendor be expected to provide support? What happens at software end-of-life? Absent end-user liability (as a matter of policy, responsibility will not be placed on the end user or open-source developer of a component that is integrated into a commercial product, per NCS Strategic Objective 3.3), what can be done to drive obsolete code from use?

In selecting a standard, policymakers also might consider its impact on competition and innovation (which standard promotes more of each) and who will develop and promulgate the standard. And, ultimately, the big question is who is liable—the code writer, tester, and/or designer; the enterprise implementing the code; or some combination thereof. Failures can occur at many points. To take a few simple examples, a software developer could design faulty software; a quality engineer could fail to adequately test code; or a user could improperly configure or fail to patch software. Today’s end-user license agreements largely place liability on end users, but a new liability regime could bar such disclaimers (except, perhaps, in cases where we might wish to allow sophisticated users to reallocate risk) and establish properly designed standards of care that could more efficiently allocate liability among software developers, integrators, end users, and consumers.

Safe Harbor

Policymakers are cognizant that the risk of liability, if too great, could serve as a bar to market entry and cause innovators to abandon the market. A significant challenge for those working to raise the bar on software security is to “design a regime that incentivizes software providers to improve the security of their products without crippling the software industry or unacceptably burdening [the] economic development and technological innovation” essential to U.S. global technological leadership, economic prosperity, and national security.

This is where safe harbors come in. Recognizing that no software is 100 percent secure, and that harm can result even when software developers satisfy the most stringent requirements, the NCS explains:

the Administration will drive the development of an adaptable safe harbor framework to shield from liability companies that securely develop and maintain their software products and services. This safe harbor will draw from current best practices for secure software development, such as the NIST Secure Software Development Framework. It also must evolve over time, incorporating new tools for secure software development, software transparency, and vulnerability discovery.

A safe harbor is a legal provision that provides protection from legal liability when certain conditions are met. For example, safe harbors could be used to protect physicians from liability risk when they provide care that follows approved clinical practice standards. By defining the conditions under which companies are protected from liability with a high degree of certainty, safe harbors can reduce the need for lengthy and expensive “battles of experts” over what constitutes reasonable (nonnegligent) conduct. This is particularly important in the context of software.

In an ideal world, safe harbors will have four essential features. First, they will offer greater certainty and lower risk to regulated entities. A clearly defined safe harbor will allow software developers to do their job with the relevant safe harbor requirements in mind and will reduce uncertainty by precluding a court or jury from applying a different set of requirements after the fact. Compliance also protects against liability and lets developers avoid costly litigation over whether they met the relevant standard of care.

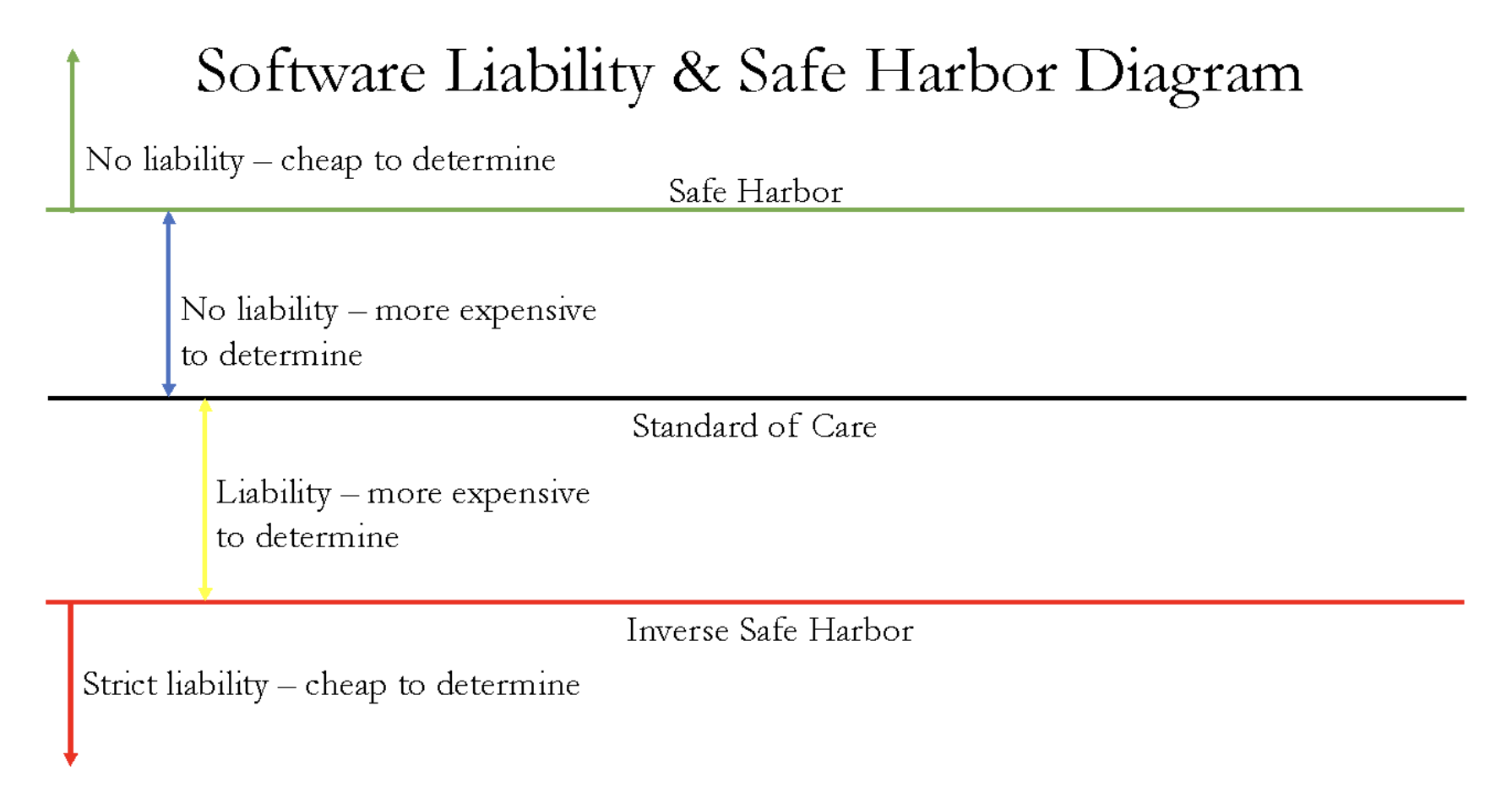

Second, a well-designed safe harbor will create incentives, in the form of protection from legal liability, for software developers to adopt best practices and, perhaps through an “inverse safe harbor” (which would impose automatic liability on software developers engaged in defined worst practices), avoid the worst ones.

Third, per the NCS, a good safe harbor definition will “draw from current best practices for secure software development .... It also must evolve over time, incorporating new tools for secure software development, software transparency, and vulnerability discovery.”

And fourth, when developing a safe harbor, one would know whether, and to what extent, the proposed requirements would be effective in securing software. However, quantifying software security is, to say the least, challenging. Various imperfect measurements of code quality currently exist, such as defect density (the number of defects relative to the number of lines of code), defect severity (how badly the bug affects the program’s functionality), and defect priority (the level of business importance assigned to the defect). Unfortunately, it is difficult even to determine the relevant baseline for comparison with these rough metrics, and good data is lacking regarding which behaviors improve code quality and by how much.
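As a rough illustration of the first metric, the short Python sketch below computes defect density. Expressing it per thousand lines of code (KLOC) is a common convention but an assumption here, since the article does not specify a unit, and the two codebases and their defect counts are hypothetical.

```python
def defect_density(defects: int, lines_of_code: int, per: int = 1000) -> float:
    """Defects per `per` lines of code (per thousand lines, KLOC, by default)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects * per / lines_of_code


# Two hypothetical codebases: raw defect counts differ, but density normalizes for size.
small = defect_density(defects=12, lines_of_code=8_000)
large = defect_density(defects=90, lines_of_code=150_000)
print(f"{small:.2f} vs {large:.2f} defects per KLOC")  # prints "1.50 vs 0.60 defects per KLOC"
```

The calculation normalizes only for size. It says nothing about severity or priority, and, as noted above, there is no agreed baseline for deciding whether 0.6 or 1.5 defects per KLOC is "good enough."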

It is worth noting, however, that the absence of perfect security metrics need not paralyze efforts to improve software security. (In many other contexts, regulatory frameworks have been adopted in the absence of empirical data demonstrating their necessity. To this day, for example, it remains unsettled whether the patent system, adopted at the country’s founding, bolsters or deters innovation.) Policymakers can still identify “bad” practices to be avoided and good practices to be embraced. And all of this can be done without interfering with parallel efforts to improve metrics.

As the accompanying graphic illustrates, safe harbors offer software developers the promise of legal certainty coupled with reduced costs by short-circuiting otherwise expensive litigation over whether a developer has met the relevant standard of care.

Defining safe harbors will not be an easy task. The software market is incredibly diverse, and different contexts may call for different types of safe harbors. To address the diversity of the software market, safe harbors could be crafted to differ by context or industry. For example, requirements for large cloud providers, entities with market power, or entities that provide software to key critical infrastructures could differ from those applied more generally. The downside is that with increased customization comes increased complexity. Lawmakers also will need to determine whether safe harbor compliance confers absolute immunity from liability, lesser protections (such as a rebuttable presumption of immunity), or graduated legal protections (that is, greater protections where a particular aspect of safe harbor compliance confers greater confidence in the security of the software).

And, as with the standard of care, regulators will need to pay particular attention to the effect of any safe harbor on competition. Policymakers should take care to ensure that rules designed to enhance software security are not co-opted to limit competition. Incumbent firms with significant resources, for example, might advocate for costly but less effective requirements not because they will improve security, but because their expense will deter smaller firms from entering the market.

Firms will have a strong incentive to meet or exceed safe harbor requirements for software development in order to avoid liability, so safe harbor requirements will effectively become a “floor.” However, safe harbor requirements also could become a “ceiling” if firms lack incentives to do more than the law requires because they cannot capture the added value that springs from their investment in additional precautions.

Lawmakers also will need to define a safe harbor compliance mechanism, with options ranging from self-attestation to third-party or government certification processes, such as those set forth in the SAFETY Act (which affords certified anti-terrorism products and services a presumption of immunity from tort claims arising out of an act of terrorism).

Regardless of the specific mechanism adopted, key challenges with ensuring safe harbor compliance include cost and scalability. More involved certification processes could confer greater confidence in the security of software, but complex certification schemes are resource intensive (which reduces their scalability) and can even become cost prohibitive (in this regard, the Department of Defense Cybersecurity Maturity Model Certification program serves as a cautionary tale).

***

Properly crafted standard of care rules and safe harbors have the potential to introduce more predictability and reliability into the litigation system while promoting better practices for achieving software security. Taken together, they could encourage adherence to quality-promoting practices and processes, making software more resilient and more secure.
