The Rise and Fall of America’s Response to Foreign Election Meddling

Published by The Lawfare Institute

Over the past decade, U.S. institutions—from federal agencies to tech companies and civil society—worked to form a comprehensive shield against foreign interference in American politics. Over the past few weeks, the second Trump administration has turned itself to the task of undoing this project—setting America’s posture toward foreign meddling back, even as foreign adversaries continue to sharpen their tactics. And it has done so for transparently political reasons, offering little justification for this destruction beyond a desire for retribution.

Amidst the chaos of the current moment—a slew of executive actions, an ever-mounting pile of temporary restraining orders and injunctions in response, and the single-handed dismantling of agencies created by Congress—this story has received relatively little attention. But it’s worth watching closely. This rollback not only weakens America’s defenses, but telegraphs to U.S. adversaries that the country’s current leadership prioritizes appeasing a political base—one that it taught to dismiss foreign interference as a hoax—over protecting the country from real and ongoing threats. As in other policy areas, state capacity is now determined in response to conspiracy theories on X.

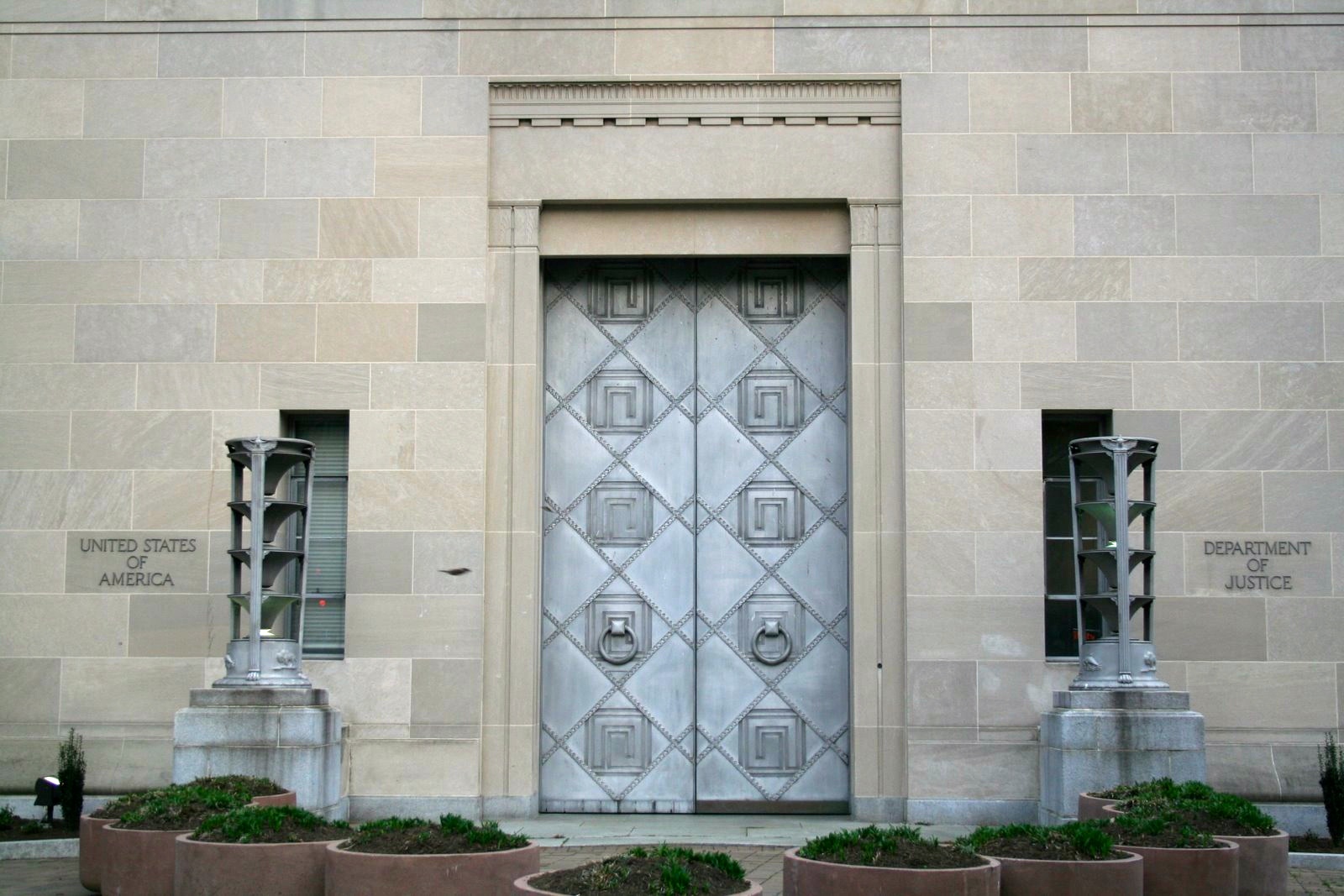

The assault on this infrastructure began even before Trump took office, but has accelerated in recent weeks. In December, Congress failed to extend funding for the State Department’s Global Engagement Center (GEC), whose mandate had charged it to identify and expose foreign propaganda efforts; it had become a frequent target of House Republicans and Elon Musk, who alleged it to be a tool of censorship. Then, Pam Bondi, Trump’s newly-confirmed attorney general, announced in a memo that the Justice Department was disbanding the FBI’s Foreign Influence Task Force (FITF)—a group of operators and analysts tasked with combating foreign malign influence operations targeting U.S. democratic institutions. Among its other responsibilities, FITF engaged with social media platforms, occasionally providing them with information and signals that they could use if they chose. Bondi also indicated that the department would roll back its recent use of the Foreign Agents Registration Act and its cousin statute, 18 USC 951, to respond to foreign interference. Within the Department of Homeland Security, employees at the Cybersecurity and Infrastructure Security Agency (CISA) working on countering foreign influence were placed on leave while the agency—another frequent target of House Republican censorship theories—“conducts an assessment” of “how it has executed” any work countering mis- and disinformation, as well as foreign influence operations, in prior elections.

This dismantling is all the more striking because of the urgency of the work being done. After all, in 2024, Trump’s own presidential campaign was targeted by hackers linked to Iran’s Islamic Revolutionary Guard Corps in an apparent hack-and-leak operation, and that was only one of the election interference efforts identified that year. But countering malign influence has fallen prey to a narrative embraced on the right, and by the new administration, that baselessly portrays concerns over foreign interference as a scam to steal presidential elections from Trump. To understand this dynamic, it’s useful to rewind the tape and look at why these programs were established in the first place, and how they became politicized.

From 2015 through 2017, the St. Petersburg-based Internet Research Agency and Russian military intelligence (known colloquially as the GRU) interfered in the 2016 presidential election and worked to deepen societal rifts in the United States. Despite recent attempts at revisionist history, the social media interference is an incontrovertible and well-documented fact. The discovery of this disinformation campaign jolted Congress into recognizing that the U.S. needed to rebuild its capacity, atrophied since the end of the Cold War, to respond to the evolving threat of foreign malign influence. And so, the bipartisan 2016 Countering Foreign Propaganda and Disinformation Act tasked the State Department’s Global Engagement Center, originally established to respond to the ISIS threat on social media, with countering foreign propaganda and disinformation directed at U.S. national security interests.

In the private sector, tech platforms—rattled by the realization that they’d been manipulated by foreign trolls masquerading as Real Americans—staffed up platform “integrity” teams. To find and disrupt coordinated networks of inauthentic bots and trolls, which targeted the right and left alike, the companies recruited people with expertise in investigations and analysis. (Some had previously worked for the FBI or intelligence agencies; this would later be reframed as evidence of some sort of “deep state takeover” of Big Tech.) Academia and the private sector responded as well. Research centers and companies emerged that attempted to detect bots and trolls via the public data visible to outsiders.

Initially, this work was frequently focused on Russia and its sustained determination to manipulate the American information environment. But as more adversarial actors picked up the playbook, researchers and platforms—at times working collaboratively—discovered many more operations. Over time, campaigns attributed to Saudi Arabia, China, Iran, and dozens of other countries were documented and disrupted. The goals—intimidating critics, seeding misleading narratives, drowning out dissent—varied according to the geopolitical power needs of the instigator.

Within the federal government, the capacity for detecting and responding to campaigns targeting the U.S. evolved as well. In autumn of 2017, the FBI established the Foreign Influence Task Force. In November 2018, the Cybersecurity and Infrastructure Security Agency was established within the Department of Homeland Security. And in December 2019, Congress established the Foreign Malign Influence Center within the Office of the Director of National Intelligence. At the time each of these centers was launched, the President of the United States was Donald J. Trump.

During this same period, Facebook, Twitter, and sometimes YouTube began to release reports, and sometimes data sets, documenting networks of inauthentic accounts linked to state actors or third-party mercenaries. Research centers like the Stanford Internet Observatory (SIO, where one of us worked), Graphika, and the Digital Forensic Research Lab (DFRLab) used the data sets to produce independent assessments of the disrupted operations. Everything was communicated transparently to the public. The goal was to help people around the world understand that disinformation campaigns run by deliberate manipulators were real and global, and that this was not a partisan issue. In one notable example, Graphika and SIO published a joint analysis of an influence operation run by the Pentagon.

The capacity had a real effect. Increased vigilance by platforms and researchers meant that malign influence networks were more frequently disrupted before amassing large followings. In Meta’s Adversarial Threat Reports, discussions of inauthentic network takedowns occasionally nodded to a government tip. Statements from FITF, CISA, and ODNI announced disruptions of operations targeting U.S. elections, or presented joint assessments of election integrity. The Justice Department increasingly used prosecutions under FARA and Section 951, along with other statutes, as a tool to name and shame the actors behind influence campaigns.

But the political ground began to shift around the 2020 election. Anticipating foreign interference, SIO, Graphika, and DFRLab, plus the University of Washington Center for an Informed Public, established the Election Integrity Partnership to track viral misleading narratives related to voting processes, or which sought to delegitimize the election. The foreign-focused government agencies, themselves continuing their mandate to track foreign propaganda, occasionally engaged with the researchers about suspected foreign interference.

Yet although Iran, Russia, and China did run influence operations, the most viral efforts to delegitimize the election—for example, via false claims that Dominion voting machines were rigged, or that Sharpie markers were given out to hinder ballot readability in pro-Trump districts—were primarily boosted by domestic influencers and by President Trump himself. The academic analysts of the Election Integrity Partnership examined the spread of viral claims and occasionally communicated with election officials, who could post accurate information about the situations, or platforms, who could decide whether to moderate as they saw fit (usually via labels). Meanwhile, CISA independently ran a “Rumor Control” website that offered authoritative information, reiterating after election day that the election had been secure; this would later get CISA’s head fired by tweet. Trump’s rejection of Russian election meddling in 2016 as a “hoax” had already placed discussions of foreign interference into a politically contentious realm for the right; following Trump’s 2020 loss and the riot of Jan. 6, they began vilifying efforts to counter propaganda writ large.

This rhetoric picked up in earnest after the 2022 midterms with the launch of Rep. Jim Jordan’s (R-Ohio) Select Subcommittee on the Weaponization of the Federal Government, which was devoted to digging up instances of supposed misbehavior by agencies and civil society groups examining viral claims. A repetitive process began: innuendo-laden blog posts that made evidence-free allegations were laundered through right-wing media and aligned influencers, generating outcry. Prominent ideologically aligned writers were invited to testify before the Weaponization Subcommittee. The targets (academic institutions, civil society organizations, nonprofits, companies, and government agencies) occasionally received subpoenas and requests for non-public testimony following these public allegations. Some were sued by right-wing lawsuit mills such as America First Legal. Some were sued by Elon Musk. Those who were targeted often went silent due to concern about harassment or further legal risk. Meanwhile, sweeping classes of social media research, corporate ad-buying practices, and platform content moderation policies were reframed as “censorship.” Cooperation between platforms and researchers, or communication between stakeholders, was reframed as a cabal. Work on Russian propaganda was positioned as part of the “Russiagate hoax.” And the government teams tasked with ensuring safe elections and mitigating foreign interference, teams that had been led by Trump appointees during the 2020 election, were reclassified as deep state censors complicit in silencing patriotic Americans.

“Disinformation,” according to this theory, is a word that elites use to shut up people they don’t like. The cabal of academics and government operatives, and their media collaborators, had begun using it to set the stage for a “foreign-to-domestic switcheroo.” They’d brainwashed people into believing in Russian interference (or believing that it was a problem) in order to raise money and build networks to fight the Kremlin, when what they were really building was a “censorship industrial complex.” Once assembled, this “AI censorship death star superweapon” was pointed at its true target, right-wing populists, just in time to purportedly steal the 2020 election from Trump.

These attacks were baseless, relying on innuendo rather than evidence. But they were repeated incessantly, and even before the 2024 election, they bore fruit. Lawsuits like Murthy v. Missouri led the government to stop communicating with platforms even about hostile foreign actors, which Meta remarked on in a written adversarial threat report. Under pressure, the Stanford Internet Observatory closed down its election integrity work. Other research centers are fighting vexatious lawsuits or reorienting their work away from misinformation. Among the social media companies, Elon Musk’s X dismantled teams. In advance of November 2024, various government agencies and programs, including CISA and FITF, pulled back from the more collaborative approach they had adopted in 2020, holding technology companies and election workers at arm’s length.

That brings us to the present day. Under the new administration, these government efforts have now all been abruptly disbanded, with relatively little fanfare and not much in the way of explanation. According to NPR, CISA’s purge placed staffers who worked on combating foreign propaganda on leave, in addition to “a team of 10 regional election security advisers.” Politico reports that at least one person now on leave never worked on information integrity issues but instead focused on protecting election infrastructure from cyberattacks. Likewise, according to Wired, the work on pause involves CISA’s collaboration with state and local election offices aimed at enhancing election security. DHS Assistant Secretary Tricia McLaughlin told NBC that “CISA needs to refocus on its mission” and is “undertaking an evaluation of how it has executed its election security mission with a particular focus on any work related to mis-, dis-, and malinformation.” (The administration appears to have taken these actions separately from broader efforts to slash jobs across the government. On Feb. 20, a week after the dismantling of the infrastructure to counter election interference, Wired reported that aides from Elon Musk’s DOGE project had arrived at CISA.)

Little information is available about the Justice Department rollbacks. But it’s noteworthy that a July 2024 report from the Justice Department Office of Inspector General recommended that the department do more to counter foreign influence—not wind down its efforts altogether. The only explanation the Justice Department has provided for the shuttering of FITF and the department's other initiatives comes from Bondi’s memo, which states that FITF will be closed “to free resources to address more pressing priorities, and end risks of further weaponization and abuse of prosecutorial discretion.” It’s not clear what “abuse of prosecutorial discretion” Bondi might be referencing. Perhaps she’s referring to the prosecutions under FARA and related cases of the Internet Research Agency, Russian spies in the United States, and Russians paying American influencers to spread propaganda—but if so, she doesn’t explain why she believes those prosecutions to have been abusive.

Despite Bondi’s insinuation that foreign interference isn’t a “pressing priority,” there’s no reason to think that these adversarial foreign efforts have ebbed. In late October, the Office of the Director of National Intelligence warned that “foreign actors—particularly Russia, Iran, and China—remain intent on fanning divisive narratives to divide Americans and undermine Americans’ confidence in the U.S. democratic system.” Through indictments, agency actions, and public statements, various agencies alerted the public to efforts to hack into presidential campaigns, shape news coverage around the election, and introduce chaos and confusion into the voting process. While the intelligence community has been relatively quiet since the election, technology companies are continuing to report on what they see. Just last week, Google detailed how China and Iran have been leveraging Google’s AI tool Gemini for influence operations. Now, government programs to counter these efforts have wound down, and the technology companies seem to be largely on their own.

The preexisting systems were not perfect. There are legitimate questions to resolve about the extent to which the government should be involved in addressing foreign influence operations; the Twitter Files’ reframing of foreign interference work was successful in part because it tapped into an innate wariness of government intervention on social media. And these government efforts did sometimes make mistakes in identifying purported foreign interference efforts. But these misidentifications were precisely what open channels of communication and constructive working relationships were meant to address, with each stakeholder (the government, tech companies, and outside researchers) serving as a check on the others.

Likewise, it’s true the threat of foreign disinformation campaigns was sometimes exaggerated. Many state actor interference campaigns are poorly run, and when they are disrupted early on, they show little indication of having impact. But that requires that platforms continue to look for them. When platforms were not looking—for example, during the Russian interference cycle of 2015-2017—thousands of explicitly manipulative troll accounts ballooned in followers and engagement. These campaigns are real, they remain an ongoing challenge, and the idea that disrupting them should be solely the purview or responsibility of tech companies is also an alarming thought.

An administration that actually wanted to address these problems would have plenty of options. It could engage in greater transparency efforts or push for legislation to that effect. It could staff the teams working on these issues with new hires preferable to the administration, as it’s done in so many other areas. But the Trump administration has done none of these things. It hasn’t even explained its reasoning.

In understanding the administration’s actions, therefore, we’re left to extrapolate from the history of how this has played out over the last decade. And that suggests that Trump has destroyed these programs either because he and the people around him genuinely believe the conspiracy theories, or because the Republican Party has so convinced its base of this narrative that it must now follow through in destroying the government’s capacity to combat foreign interference.

Whichever is the case, U.S. policy toward foreign interference is now being shaped by an entirely fabricated narrative. The infrastructure to combat foreign meddling took years to build, but its destruction has taken mere weeks. The consequences may reverberate for far longer.