Transatlantic Techlash Continues as U.K. and U.S. Lawmakers Release Proposals for Regulation

The 2018 “techlash” shows no sign of slowing. The last week of July saw the release of two papers proposing significant increases in the regulation of tech companies, particularly with an eye toward protecting the integrity of political processes and elections.

Published by The Lawfare Institute

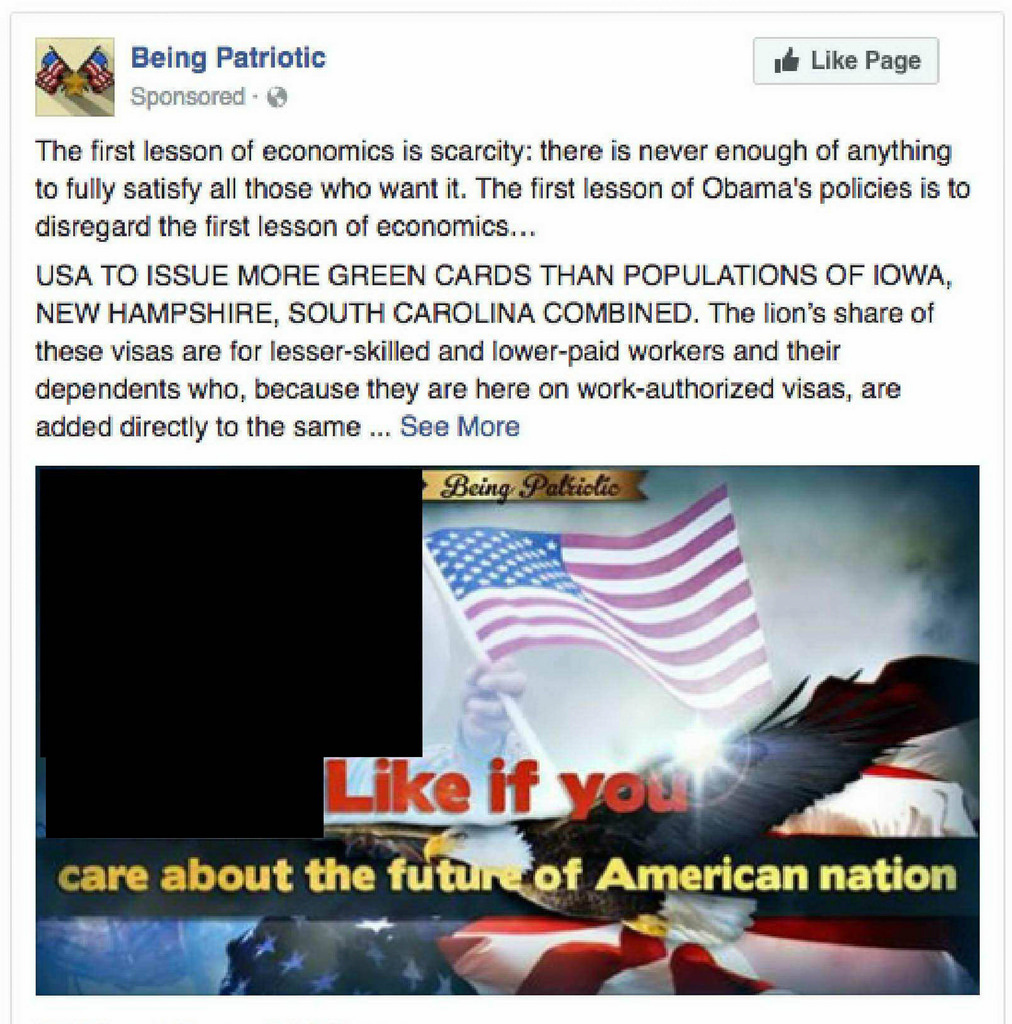

The first was an interim report released by the U.K. House of Commons Digital, Culture, Media and Sport Committee as part of the committee’s ongoing inquiry into “fake news.” The second, prepared by the staff of U.S. Senate Intelligence Committee Vice Chairman Mark Warner, was a policy white paper on “proposals for regulation of social media and technology firms.” Warner’s white paper, published by Axios, responds to what the paper characterizes as the exposure of “the dark underbelly of the entire ecosystem” of digital communications technologies. The U.K. report likewise states that the issues of fake news and relentless targeting of hyper-partisan views online by both Russian state-sponsored actors and private companies have shown that “the existing legal framework” is outdated and has put democracy “at risk.”

Both documents are intended as conversation starting points, rather than final comprehensive proposals for reform. But they are also much more substantive than the theatrics of earlier hearings before these same committees, in which lawmakers displayed deep uncertainty over how to address the unprecedented challenges social media has created for democracy. Now, at least some of these lawmakers have presented concrete ideas that demonstrate a more nuanced understanding of the issues.

Reading the two papers side by side is revealing. For almost every one of the 20 discrete regulatory proposals set out in the Warner white paper, the U.K. report has a corresponding recommendation. The considerable overlap between the two documents hints at which reforms are most likely to be priorities in the near future. But the areas of divergence are equally telling, suggesting some fundamental differences in the countries’ perspectives on underlying questions.

Key similarities

Regulation is coming: It’s clear that greater regulation is coming to the internet. In a striking parallel, both documents explicitly refer to the online information ecosystem as the “Wild West” and declare that this state of affairs can no longer continue. Warner’s white paper states that current laws leave social media and technology firms “unmanaged and not accountable to users or broader society.” The paper suggests that legal reforms are necessary to ensure that platforms operate in a way that is socially beneficial. The U.K. report declares that “[w]ithin social media, there is little or no regulation,” citing the U.K. Information Commissioner’s description of herself as the “sheriff in the wild west of the internet.” While lawmakers’ dissatisfaction with this situation is not new, these documents seek for the first time to further the conversation with specific proposals that consider the broader picture rather than a single aspect or problem (such as lack of transparency in political advertising).

There are unique challenges in legally regulating social media: Both documents express that law can be a blunt instrument for fixing challenges posed by new technologies: law is typically a slow-moving response, while tech develops at an unusually fast pace. Warner’s white paper notes that “outdated election laws have failed to keep up with evolving technology” and “the law may be slow to address novel forms of [user manipulation] practices not anticipated by drafters.” The paper therefore calls for regulators to be granted rulemaking authority to ensure that laws keep pace with tech. Similarly, the U.K. report seeks to make “principle-based recommendations which are sufficiently adaptive to deal with fast-moving technological developments.”

The reports agree on the key areas of focus: Warner’s white paper identifies three main target areas for policymakers: disinformation that undermines trust in institutions, consumer protection, and problems for long-term competition and innovation posed by the dominance of a few tech platforms. The proposals in the U.K. report principally fall into the same three buckets.

Tech companies need to take responsibility for content on their platforms: Politicians have long called for platforms to more extensively police content on their sites, but these documents match such demands with concrete recommendations, focusing particularly on the need for greater transparency around the sourcing of online content. Bots play a key role in the amplification and dissemination of disinformation, the proposals argue, and so they should be clearly marked (according to the U.S. report) and information about them should be provided to researchers rather than just removing these accounts surreptitiously (according to the U.K. report). Both reports propose updating their respective countries’ election laws to include greater disclosure requirements for political advertising online. The reports also argue that tech companies need to determine the origin of accounts, including by addressing the use of VPNs (U.S.) and shell companies (U.K.) to obscure origins, in order to minimize the capacity for foreign actors to use posts and ads to influence democratic processes. But while the Warner white paper notes that this “can be technically challenging,” neither document gives ideas of how tech companies should implement this proposal.

This responsibility includes legal liability: The U.K. report recommends that the government establish “clear legal liability for the tech companies to act against harmful and illegal content on their platforms.” Legal proceedings could be launched either by a public regulator or by private individuals or organizations harmed by content disseminated on social media, including defamatory content.

More surprisingly, Warner’s white paper also contemplates a revision to the intermediary immunity created by Section 230 of the Communications Decency Act (sometimes called the “Magna Carta” of the internet) to allow platforms to be liable to tort claims if they fail to take down manipulated content. This would be a major change, and the paper accepts that it would be “bound to elicit vigorous opposition.” The fact that the suggestion was even made shows the seriousness with which lawmakers are taking the threat of disinformation.

Nevertheless, because of Section 230 and stringent U.S. attitudes toward freedom of speech, such liability is likely to be more cautiously introduced in the U.S. than in the U.K. Warner’s white paper notes the difficulties in distinguishing between true disinformation and legitimate satire and the need to limit potential for bad-faith reporting by users of content for removal. The U.K. report, on the other hand, speaks admiringly of a recent German law, known as NetzDG, which imposes large fines if tech companies fail to remove content, including hate speech, within 24 hours. The report notes criticisms that such a law could be seen to tamper with free speech, but concludes that “one in six of Facebook’s moderators now works in Germany, which is practical evidence that legislation can work.”

Tech companies need to be more transparent: Warner’s white paper suggests requiring that big platforms grant public interest researchers access to company data so that issues within and misuse of platforms can be better understood. Similarly, the U.K. report expresses frustration with platforms’ opacity, stating, “Facebook has all of the information,” and urges greater transparency requirements so that social media companies are not in a position of “marking their own homework.”

In this vein, both documents contemplate auditing requirements for platforms’ algorithms. Warner’s white paper suggests mandatory standards for algorithms to be auditable for efficacy and fairness, as well as hidden bias. This is one of the most firmly worded proposals in the paper, which states that “a degree of computational inefficiency seems an acceptable cost to promote greater fairness, auditability, and transparency.” The U.K. report likewise recommends a government body be empowered to conduct “algorithmic auditing,” so that non-financial aspects of tech companies are audited and scrutinized just as the financial aspects are. Such proposals are likely to be well received by academics and civil society groups, many of which have sought to draw greater attention to algorithmic bias and discrimination.

Users need better protections: Both reports express concern about users’ limited power over their own data and privacy. Warner’s white paper contemplates introducing legislation mirroring the European General Data Protection Regulation—or, if this is “too extreme,” then at least one specific element that requires first party consent for any data collection and use. This would be a marked departure from the much lighter touch that U.S. regulators have taken with tech companies compared with their European counterparts.

But the GDPR seems to have found favor in the U.K. too. The U.K. report recommends that the government close loopholes that might leave people in the U.K. without GDPR protection when the U.K. leaves the EU. Both papers also suggest there needs to be a more substantive understanding of what constitutes user consent to data use, admonishing tech companies for “dark patterns” that are intentionally designed to sway users toward taking actions they would otherwise not take under effective, informed consent (U.S.) such as “small buttons to protect our data and large buttons to share our data” (U.K.).

The rise of a few dominant tech companies poses a threat to competition and therefore users: The issue of competition gets the least attention in both documents. The U.K. report, in particular, does not deal with the issue in much depth, as it is not within the core remit of the fake news inquiry. The report does note its importance, however, saying that the “global dominance of a handful of powerful tech companies” bears heavily on those companies’ behavior and responses to issues. It therefore recommends that the U.K. government’s forthcoming white paper on making the internet and social media safer, due before the end of 2018, set out measures to protect users and their data, especially given that “in the tech world, consumer detriment is harder to quantify.” The writers of that white paper might want to look at Warner’s white paper for inspiration. The handful of reforms proposed by Warner present a nuanced understanding of the unique aspects of how online platforms work and of how users can be empowered and innovation protected.

Governments must devote more resources to these issues: Both documents call for public regulators to be further empowered and provided with greater resources to better police the online information ecosystem. The U.K. report goes a step further by suggesting that the funds for doing so could be raised through a levy on tech companies operating in the U.K. Warner’s white paper calls for the creation of an “Interagency Task Force” to help create a whole-of-government approach to asymmetric attacks against election infrastructure and democratic institutions. The U.K. report similarly calls for a joint working group to ensure that departments collaborate, and also recommends greater information sharing and coordination between the U.S., E.U. and U.K. governments, as well as “parliamentarians around the world.”

Increased digital literacy is necessary to effectively counter disinformation: This is a promising common thread in both documents—long-term democratic resilience requires greater public understanding of the online information ecosystem. Both reports call for public education initiatives to promote media literacy from an early age. The U.K. report underlines how seriously it views this project by stating that “[d]igital literacy should be the fourth pillar of education, alongside reading, writing and maths.” Warner’s white paper recognizes the nuances of the issue. It argues that education initiatives need to focus on cultivating greater awareness about general information weaponization, citing research that shows how empowering individuals to act as fact-checkers and critics may backfire by exacerbating distrust in institutions.

Areas of divergence

These lawmakers see tech companies differently: There is a striking difference in tone in the way the reports describe tech companies’ role in contributing to online threats. Warner’s white paper begins by extolling the innovation and success of the American companies, which, it argues, have created world-changing products and services. The U.K. report, by contrast, repeatedly chides the tech companies (and Facebook in particular) for their obstinacy both in acknowledging problems and in providing candid information to the inquiry. This difference is not purely due to the nationality of the companies but reflects the different relationships between tech companies and the two sets of lawmakers. While Facebook CEO Mark Zuckerberg voluntarily testified before Congress for over 10 hours earlier this year, he has repeatedly declined requests to give evidence to the U.K. inquiry. In fact, the U.K. report calls again for Zuckerberg to give evidence in person to answer the many outstanding questions to which it says Facebook has not responded adequately to date. The U.K. report as a whole displays the regulatory challenges of the “globalised nature of social media,” especially in those jurisdictions in which tech companies are not actually based.

There is greater attention in the U.K. to international ramifications: The U.K. report devotes considerable space to discussing the impact of social media in countries all around the world. In particular, it strongly criticizes Facebook for the company’s role in spreading misinformation during ongoing ethnic violence in Myanmar, concluding that

“Facebook is releasing a product that is dangerous to consumers and deeply unethical. We urge the Government to demonstrate how seriously it takes Facebook’s apparent collusion in spreading disinformation in Burma, at the earliest opportunity. This is a further example of Facebook failing to take responsibility for the misuse of its platform.”

The report also discusses Russian disinformation efforts during the 2017 Catalan independence referendum campaign, as well as the role of SCL Elections (a company related to the now-infamous Cambridge Analytica) in using misinformation, dirty tricks and manipulation of social media to influence elections all around the world. The U.K. report makes clear the committee’s belief that regulators must consider measures to minimize harmful effects around the world. Warner’s white paper, by contrast, does not engage with any of these international or transnational issues. This is a striking contrast.

Different understandings of political speech underpin the reports: Although it is not stated explicitly, the documents express distinct underlying conceptions of how speech should be regulated. Along with its receptivity to a NetzDG-like regulation (as mentioned above), the U.K. report contains statements that would sit uneasily with the unique First Amendment culture in the U.S., which is more absolutist in its conceptions of free speech. For example, the U.K. report states that “[a]rguably worse than false information is hyper-partisan views which play to fears and prejudices,” views that social media allows to be targeted to receptive groups. In the highly polarized and robust U.S. political media environment, this kind of speech would more likely be seen as a natural (and legally protected) part of political debate. Such fundamental differences will no doubt manifest in the respective regulatory responses developed in the U.S. and U.K.

Conclusion

Lawmakers on both sides of the Atlantic are coming to grips with new threats caused by vulnerabilities in the online information ecosystem, and are now putting pen to paper on concrete proposals for reform. While actual legislation may still be some time away, the detailed and serious nature of these papers suggests that tech companies should expect more comprehensive regulation from lawmakers around the world.
