What the Omegle Shutdown Means for Section 230

Is the closure of the anonymous videochatting site a victory against online sexual abuse, or a step onto a slippery slope?

Published by The Lawfare Institute

in Cooperation With

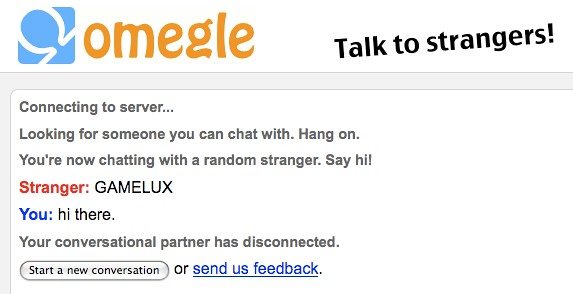

The website’s slogan was simple: “Talk to Strangers!” For 14 years, that was exactly what users found when they visited the chat service Omegle, which paired people randomly with anonymous users for text or video conversations. Some users used the site as a way to connect about shared interests, meet celebrities, or even make friends. Others found themselves plunged into frightening or even dangerous situations—flashed by genitalia, matched with sexual predators, or faced with threats of school shootings.

On Nov. 8, though, users visiting Omegle were met not with the familiar greeting, but with a lengthy, sorrowful goodbye note from the site’s founder, Leif K-Brooks, explaining that he had decided to shut down the service. He hinted vaguely at “attacks” on the platform that had made running the website “no longer sustainable, financially nor psychologically,” concluding sadly, “The battle for Omegle has been lost, but the war against the Internet rages on.”

What K-Brooks’s note left out, at least initially, were the specifics of the “attacks” in question. A hint appeared a week later, when the goodbye note was updated to read: “I thank A.M. for opening my eyes to the human cost of Omegle.”

The sentence linked to a court filing in a civil case, A.M. v. Omegle—a lawsuit brought by a sextortion victim who was exploited as a child using Omegle’s platform. According to Carrie Goldberg, an attorney for A.M., the site’s shutdown was a condition of the lawsuit’s settlement. The case, crucially, had proceeded after Omegle failed to convince a district judge it was shielded by Section 230 of the Communications Decency Act, the recently controversial law that protects platforms from most civil liability for third-party content.

The story of Omegle is, in significant part, a story about debates over Section 230’s liability shield, which critics argue allows bad actors online to avoid responsibility for the sometimes horrific behavior that takes place on their platforms. If you take this view, then the shutdown of Omegle looks like a victory.

The problem, though, is that rolling back the statute’s immunity protections also risks encouraging more responsible platforms to shut down wide swaths of harmless speech online, lest they risk the litigation that Omegle faced—the same dynamic that led Congress to pass Section 230 in the first place. One of the key claims in the lawsuit that brought down Omegle, for example, was filed under the Allow States and Victims to Fight Online Sex Trafficking Act (FOSTA), a 2018 carve-out to Section 230’s immunity shield whose poor drafting has pushed many platforms toward overly aggressive moderation. That censorship has caused demonstrable real-world harms. Though the statute was nominally designed to combat sex trafficking, its critics have argued that FOSTA has accomplished little in the way of helping trafficking victims while placing adults engaged in consensual sex work in danger by leading to the shuttering of online services that sex workers used to remain safe.

Shutting down Omegle, K-Brooks wrote in his farewell note, is comparable to “shutting down Central Park because crime occurs there—or perhaps more provocatively, destroying the universe because it contains evil.” This is an extremely generous reading of the situation, to say the least. But in one sense, K-Brooks is not entirely wrong. There is a real risk that the legal tools used to shut down Omegle might be used to cramp potentially valuable online speech in the future. It’s tremendously difficult to figure out a route by which websites enabling vicious online abuse can be held accountable without also stepping onto a slope that’s already been demonstrated to be treacherously slippery.

The good news for people who care about a free and open internet is that the legal reasoning set out in the Omegle case is relatively constrained. The case makes a decent attempt at striking some balance between holding Omegle responsible and limiting the legal exposure of websites that are genuinely doing their best, or even just websites that aren’t actively doing their worst. But this balance is not entirely stable, and it could still tip in the wrong direction.

K-Brooks launched Omegle in 2009. Upon opening the webpage, users had a choice of whether they would like to text chat, video chat, or both with another anonymous user of the site. They would then be randomly paired with a “stranger,” sometimes connected by a demonstrated shared interest, other times not.

According to Omegle’s terms of service agreement, the website was intended for users over the age of 18. Earlier iterations of the website featured a message that children aged 13 and older were permitted to use the site with parental supervision—though by 2022, the front page read only “18+.” Despite these limitations, the website featured no age verification or user registration requirement and appears to have been used regularly by unsupervised children. Recent reporting has identified unattended children as young as seven video chatting with strangers—most often adults—on the platform.

The chat website initially gained popularity in the earlier days of social media but experienced a significant resurgence during the coronavirus pandemic. According to BBC News, Omegle’s monthly visits exploded from approximately 34 million in January 2020 to approximately 65 million in January 2021, and reached 70.6 million in January 2023. The company said at the time that chatting with strangers on the platform was a “great way to meet new friends, even while practicing social distancing.”

Unsurprisingly, the random, anonymous nature of connections on Omegle attracted users looking for sexual experiences online. Given the number of minors also using the website, this created obvious problems. Often, adult users looking for sex were paired randomly with minors—and, unfortunately, some adults also visited the website in search of sexual interactions with children. Countless horrific stories have emerged of sexual abuse of children on the platform. According to BBC News, Omegle has been mentioned in more than 50 cases against pedophiles in recent years, and an organization monitoring the online safety of children had 68,000 reports of “self generated” child sexual abuse materials coming from the website.

K-Brooks wrote in his farewell note that “Omegle punched above its weight in content moderation” and that “Omegle worked with law enforcement agencies, and the National Center for Missing and Exploited Children, to help put evildoers in prison where they belong.” It’s hard to fully know what to make of this. At one point, K-Brooks stated in a court filing that he was the site’s sole employee, suggesting that he hadn’t hired any full-time moderators—though this may not indicate much, as many platforms now rely heavily on contractors for content moderation work.

Yet many tech platforms also cooperate with law enforcement, as K-Brooks described doing, without engaging in much or any proactive content moderation themselves. And the disclaimer that for some time appeared on the site’s front page is somewhat at odds with K-Brooks’s sunny description of his website’s purpose. “Understand that human behavior is fundamentally uncontrollable,” the disclaimer read. It went on: “Use Omegle at your own peril.”

Whatever measures Omegle did or didn’t implement to prevent abuse on its platform, the allegations in A.M. v. Omegle, the lawsuit that led to Omegle’s shutdown, are profoundly ugly. According to the initial complaint and second amended complaint, A.M.—who used a pseudonym to protect her identity—alleged that in 2014, when she was 11 years old, she was paired to chat with an adult man on Omegle. After meeting on Omegle, the man, a Canadian named Ryan Fordyce, convinced A.M. to provide him with her contact information. Over the next three years, the complaints allege, Fordyce sexually abused and exploited A.M. online, forcing her to generate and send child sexual abuse material to him and his friends. According to A.M., Fordyce then trained her to traffic other children on Omegle, forcing her to visit the website “for a set number of hours” to “find other girls who ‘[he] would like,’” obtain their contact information, and then send it back to him. (Fordyce faced criminal charges in Canada after law enforcement uncovered hundreds of sexually explicit images of children, including A.M., in his possession. In 2021, he was sentenced to eight and a half years in prison.)

Typically, Section 230 immunity creates problems for efforts to hold websites civilly liable for circumstances like A.M.’s, however awful. After all, Fordyce’s interactions with A.M. via Omegle were his own, not those of the website itself—meaning that suing Omegle over what Fordyce did would be seeking to hold the platform liable for third-party content. A.M.’s complaints attempted to get around the liability shield through two main routes. First, A.M. argued that her abuse was the direct and “predictable consequence” of Omegle’s “negligent design”; that is, she sought to bring a claim against Omegle based on the platform’s own design rather than on third-party content. Such product liability suits have become an increasingly common, though not always successful, means of attempting to circumvent Section 230 immunity. And, second, A.M. sought to use the loopholes in Section 230 created by FOSTA to bring claims against the platform.

Twice, Omegle unsuccessfully moved to dismiss the lawsuit, pointing, among other things, to Section 230. The litigation went on for nearly two years, until the case was dismissed on Nov. 2 following Omegle’s settlement agreement with A.M. A week later, the site shut down. A.M.’s suit wasn’t the only case that K-Brooks was litigating at the time of the shutdown, either; in another case, M.H. v. Omegle, the parents of a child exploitation victim alleged that, in addition to other infractions, Omegle violated FOSTA by “knowingly receiv[ing] value for their ongoing business practices that allow their website to become a means of child exploitation despite the risk to children.” Omegle had won its motion to dismiss in that case, but the plaintiffs had appealed to the U.S. Court of Appeals for the Eleventh Circuit by the time Omegle shut down.

Understanding the litigation against Omegle requires taking a close look at FOSTA, which represented the first substantive change to Section 230’s liability shield since the statute first became law in 1996. Broadly speaking, it expanded mechanisms for holding platforms both criminally and civilly liable for content related to sex trafficking in both state and federal court. Among other things, it created a new criminal penalty, 18 U.S.C. § 2421A, for “own[ing], manag[ing], or operat[ing]” a platform “with the intent to promote or facilitate … prostitution” and expanded existing anti-sex trafficking law under 18 U.S.C. § 1591 to criminalize “knowingly assisting, supporting, or facilitating” sex trafficking. These changes didn’t directly affect Section 230, which has never extended to federal criminal law—but FOSTA also carved out an exemption from Section 230 immunity for civil lawsuits under § 1595, which creates a civil cause of action for violations of § 1591.

If that makes your head hurt, you’re not alone. The law is, charitably, somewhat of a mess, with a number of different moving parts that interact with one another in confusing and unintuitive ways. To make matters worse, the initial legislation left key questions unanswered, such as what, exactly, it means for a platform to “knowingly assist[], support[], or facilitat[e]” sex trafficking. Once FOSTA became law, a number of tech companies made the decision to simply pull back from conduct that could potentially open them up to litigation—in one case, pulling back from providing infrastructure services to a social network run by sex workers.

Companies faced an incentive to either cease moderating altogether (to avoid gaining knowledge of user activity that could make them culpable under FOSTA) or to over-moderate, pulling down innocuous material alongside potentially illegal content so as to avoid risk. In the five years since FOSTA’s passage, a mounting pile of evidence has indicated that the law has limited all kinds of online speech that has nothing to do with sex trafficking. This crackdown has led to real-world harms—particularly for sex workers who lost access to the platforms they needed to make money safely, pushing some to work in potentially dangerous conditions on the street.

Still, supporters of FOSTA might look at K-Brooks’s note as an example of the statute working exactly as it was intended. Here’s a website that was consistently used by bad actors to harm children, which has now shut down seemingly because of the litigation costs stacked up from fighting FOSTA claims. Perhaps some websites have shuttered that shouldn’t have, they might say, but Omegle’s closure actually shows that FOSTA can do some good.

It’s hard to argue that Omegle was a net force for good in the world. The question, though, is whether the legal toolkit used against Omegle could be used just as easily against other websites that are less harmful, and whether Omegle’s closure will lead cautious platforms to over-moderate to avoid sharing the same fate. That choice can lead to harms, too, albeit in a quieter way, by limiting the sharing of potentially valuable speech and avenues for self-expression. Is there some kind of Goldilocks standard under which FOSTA could be used against truly noxious platforms without chilling others?

The plaintiff in A.M. v. Omegle brought a handful of different claims under FOSTA, two of which District Judge Michael Mosman of the U.S. District Court for the District of Oregon dismissed in a July 2022 ruling. Mosman tossed out the first of those claims, brought under the new civil cause of action created alongside § 2421A, because the statute’s text does not apply retroactively (possibly thanks to the law’s poor drafting). The second claim sought to use FOSTA’s Section 230 carve-out to bring a civil claim against Omegle under state law. Mosman dismissed this as well, on the grounds that the carve-out is explicitly limited to federal civil actions under 18 U.S.C. § 1595 and certain state criminal charges.

The judge ultimately allowed the third claim, which sought a civil remedy under § 1595, to move forward. In terms of understanding FOSTA’s impact, it’s here that A.M. v. Omegle becomes important, and complicated. The reason why has to do with the mens rea standard for § 1595 claims. And the reason for that has to do, again, with FOSTA’s confusing drafting.

FOSTA amends Section 230 to exempt from the statute’s immunity “any claim in a civil action brought under section 1595 of title 18, if the conduct underlying the claim constitutes a violation of section 1591 of that title.” The difficulty here is that § 1595 and § 1591 have different mens rea standards. Whereas claims under § 1595 require only that the plaintiff show that the defendant “knew or should have known” that it was in some way benefiting from sex trafficking, § 1591 requires actual knowledge of trafficking on the part of the defendant, a more stringent standard. Given that FOSTA establishes a Section 230 exemption for § 1595 claims only “if the conduct underlying the claim constitutes a violation of section 1591,” do plaintiffs bringing those claims need to meet only the lower § 1595 standard, or the higher § 1591 standard?

This question matters a great deal both for plaintiffs seeking to bring FOSTA claims—who would benefit from a lower standard—and for websites worried about being tied up in litigation, which would rather have a higher, more defendant-friendly standard for civil claims. Over the past several years, a range of district courts have struggled to interpret the statutory language—which is not exactly a model of clarity.

In his July 2022 order denying Omegle’s motion to dismiss, Judge Mosman took the view that FOSTA “imports the higher mens rea from § 1591 into § 1595,” dismissing the plaintiff’s claim as not meeting that higher bar but allowing her the opportunity to re-plead. A.M. swiftly filed a second amended complaint alleging that Omegle “knowingly introduces children to predators causing children to be victims of sex acts.” Before the judge could rule on Omegle’s revised motion to dismiss this second complaint, though, the U.S. Court of Appeals for the Ninth Circuit weighed in on the mens rea question in Does 1-6 v. Reddit, ruling that the higher § 1591 standard is required for § 1595 claims under FOSTA. With that appellate guidance in hand, Mosman went on to apply the § 1591 standard in evaluating A.M.’s second amended complaint.

The Ninth Circuit held that liability under § 1591 requires that a company “must have actually ‘engaged in some aspect of the sex trafficking’” itself and that simply “turn[ing] a blind eye to the unlawful content” appearing on the platform isn’t enough. But the facts as alleged by A.M., Judge Mosman writes, are worse than that: “Plaintiff successfully contends that Defendant did more than ‘turn a blind eye to the source of their revenue’ …. Defendant’s entire business model, according to Plaintiff, is based on this source of revenue.” (Recall that, at the motion-to-dismiss stage, the court must accept as true the allegations in the complaint.)

In Does 1-6, the plaintiffs alleged that Reddit “provides a platform where it is easy to share child pornography, highlights subreddits that feature child pornography to sell advertising on those pages, allows users who share child pornography to serve as subreddit moderators, and fails to remove child pornography even when users report it”—which was not enough, in the Ninth Circuit’s view, to strip Section 230 immunity under FOSTA. In A.M., in contrast, Judge Mosman understands the link between child sexual exploitation and “the very structure of the platform” to be far tighter. The entire design of Omegle, in his view—and the platform’s practice of accepting advertising revenue from advertisers who choose to place ads because of the site’s sexual content—adds up to potential liability not shielded by Section 230.

As Danielle Citron and Lawfare Editor-in-Chief Benjamin Wittes wrote in 2017, “Omegle is not exactly a social media site for sexual predators, but it is fair to say that a social network designed for the benefit of the predator community would look a lot like Omegle.”

So far, this is a cautiously optimistic story about a decent application of a sloppy law. One of FOSTA’s many problems was that its tangled language made it hard to interpret, causing tech companies to hang back from activity they otherwise would have engaged in or allowed. But the Ninth Circuit’s ruling in Does 1-6 establishes precedent for a higher scienter standard that would seem to shield more responsible platforms, or even platforms that are just less actively malicious. A liability standard that protects Reddit but not Omegle from FOSTA claims seems like a decent way of distinguishing between the baby and the bathwater.

Recently, the D.C. Circuit also endorsed the Ninth Circuit’s reading of FOSTA in its July 2023 ruling in Woodhull Freedom Foundation v. United States, which upheld FOSTA against a range of constitutional challenges—but, in doing so, further reined in the statute. The appeals court held that language in FOSTA prohibiting “knowingly assisting, supporting, or facilitating” sex trafficking, or “own[ing], manag[ing], or operat[ing]” a website “with the intent to promote or facilitate … prostitution,” requires intent to aid and abet those unlawful actions. This reasoning focuses on a different portion of the statute than the Ninth Circuit’s ruling in Does 1-6 but similarly raises the bar for liability. That’s a relief for platforms worried about potential litigation.

But there’s a catch, at least as far as A.M. v. Omegle goes. Aided in part by some convoluted reasoning in Does 1-6, the district court ends up taking the view that Omegle didn’t just knowingly benefit from sex trafficking under § 1591(a)(2), as the plaintiffs alleged in the Reddit case, but actually engaged in sex trafficking itself under § 1591(a)(1). This subsection of the statute requires that the defendant “knowingly” recruit “by any means” a person, while knowing or in reckless disregard of the fact that the person in question is under 18 and “will be caused to engage in a commercial sex act.” Judge Mosman takes the view that Omegle’s marketing (“Talk to Strangers!”) constitutes knowing recruitment. But what about the link to commerce? Here, Mosman points to Omegle’s ad revenue. “Omegle receives compensation from advertisers,” he writes, “while at the same time engaging in a series of business practices that it recklessly disregards, or knows, will result in minors being exposed to sexual harm.”

In other words, for the platform to have engaged in and benefited from sex trafficking, the platform doesn’t have to be paid by the predator in question for access to the victim—as the judge acknowledges is “typical of a standard sex trafficking case.” There doesn’t even have to be money changing hands elsewhere on the platform, with Omegle’s knowledge. No money needs to change hands other than that spent by the advertisers. At times Judge Mosman seems to suggest that it’s significant that some advertisers may have chosen to spend money on Omegle because of the unsavory activity that took place there, but it’s not clear how much of a role this plays in his reasoning.

This definition of sex trafficking seems quite broad. It’s modulated somewhat by the relatively high mens rea requirement, combining actual knowledge and reckless disregard—and, taking the facts of the second amended complaint as alleged, it really does seem like Omegle knew what was happening on its platform. So a more responsible website like Reddit wouldn’t necessarily need to be concerned. And, of course, Does 1-6 is binding law in the Ninth Circuit, whereas Judge Mosman’s opinion, as a district court ruling, is not precedential. Still, this might open up another avenue of ambiguity for platforms concerned about potential liability under FOSTA.

We’ve focused here on FOSTA, but A.M. v. Omegle is also about the success of the product liability approach to getting around Section 230, the “negligent design” argument that we mentioned briefly earlier. “Omegle could have satisfied its alleged obligation to Plaintiff by designing its product differently—for example, by designing a product so that it did not match minors and adults,” Judge Mosman explained in denying Omegle’s motion to dismiss this batch of claims. “Plaintiff is not claiming that Omegle needed to review, edit, or withdraw any third-party content to meet this obligation.” Here, A.M. benefited from the Ninth Circuit’s 2021 ruling in Lemmon v. Snap, which held that Snapchat was not protected by Section 230 against claims that the company had negligently designed a feature that allowed users to record their real-life speed, which led to tragedy when three young men died in a car accident while speeding at over 100 miles per hour.

As with FOSTA’s Section 230 carve-out, the success of the product liability approach to platform litigation is a relatively new development—and, perhaps, a sign of the shifting legal and political landscape around Section 230, as sentiment sours on Silicon Valley. Shortly after the settlement in A.M. v. Omegle, the U.S. District Court for the Central District of California pointed to both Lemmon and A.M. in denying a motion to dismiss on Section 230 grounds by a number of technology companies battling product liability claims.

K-Brooks’s farewell note positions Omegle’s death as a casualty of a changing internet—a place that is less chaotic and more supervised than the online world of 2009, when K-Brooks launched the site. The slow vanishing of that world goes hand in hand with changing views of Section 230, as the tech industry has matured and American society and government have grown less willing to accept the sometimes playful and sometimes horrific disorder of the internet. Some of this change has itself created real loss—as with the disappearance of spaces used by sex workers to gather online.

If Omegle was a holdover from an older, wilder internet, though, it’s a holdover that shouldn’t have stuck around. “There are a lot of sites from Web 2.0 that I’ll miss—Flickr, LiveJournal, old Twitter, Geocities, even Tumblr’s heyday,” wrote Katie Notopoulos in Business Insider, reflecting on the site’s closure. “But Omegle isn’t one to be sad about.” It’s worth noting, too, that unlike many of the instances of censorship that took place in the months immediately after FOSTA, Omegle’s closure seems less likely to have the damaging side effects that many of the post-FOSTA website shutterings brought about. “I don’t think there were many sex workers on there,” Kate D’Adamo, an advocate for sex workers’ rights and partner at the organization Reframe Health and Justice, told us over email. With that in mind, there’s much less of a downside to Omegle’s shuttering.

Following Omegle’s announcement that it would be closing its doors, one-time users flocked to Reddit to reminisce about the good, the bad, and the very ugly of their times on the website. A surprising number of commenters wrote that they or their friends had met spouses on the platform. Others shared memories that ranged from the disturbing to the obscene. For all the cheerful insouciance of the Reddit thread, though, the underlying darkness of what sometimes happened on Omegle was never far away. “After reading some of these comments,” one user wrote, “it sounds like it should’ve shut down a long time ago.”
