What’s Working and What Isn’t in Researching Influence Operations?

The field has certainly grown apace, producing countless case studies highlighting examples of influence operations. Yet in many other ways the field has hit a rut.

Published by The Lawfare Institute

The world first learned about Russian attempts to manipulate the information environment in 2013. Staff hired by the Internet Research Agency (IRA) posed as Americans online, posting divisive comments on social media. Continued activities during the 2016 U.S. presidential election put influence operations on center stage globally. It’s been eight years since the initial IRA operation, but how far has the field working to understand and counter influence operations come in that time? The field has certainly grown apace, producing countless case studies highlighting examples of influence operations. Yet in many other ways the field has hit a rut. Scholars of influence operations still quibble over establishing common definitions and frequently come up empty when seeking access to more social media data.

Influence operations are not a new phenomenon, although the concept still has not been well defined. In influence operations, actors engage in a variety of activities intended to affect an audience or situation in pursuit of a specific aim. These activities can include tactics such as disinformation; for example, Chinese officials have repeatedly attempted to dispute the origins of the novel coronavirus through a coordinated campaign involving diplomats, state broadcasters and social media. But influence operations aren’t limited to the intentional spreading of misleading information. The term can also encompass knowing when to put an emotive message in front of an audience to encourage a behavior change, as Operation Christmas did in seeking to demobilize FARC guerrillas; agenda-setting, particularly in mainstream media, to frame a topic, as both Greenpeace and Shell did in encouraging audiences, especially policymakers, to adopt their side; and mobilizing audiences to participate by taking up and spreading a message.

The emergence of a field devoted to researching, and countering, influence operations is something I have watched closely. In 2014, I channeled a fascination with propaganda from the two world wars into researching how the phenomenon was changing in a digital age. In those early days, there were few places to find work researching influence operations. The career paths were mostly in academia or in the military or intelligence services. Marrying the two, I chose to pursue a doctorate in war studies. Along the way, I have worked with tech companies, militaries, civil society groups, and governments, learning how each understands and works to counter (and sometimes run) influence operations.

The field has come a long way since I got started in 2014. But certain pathologies remain entrenched and hamper effective dialogue and cooperation between key stakeholders. A lack of universal definitions of the problem often means that meetings are spent level-setting, rather than problem-solving. Growing distrust of industry hinders research collaboration with social media companies, for fear of attacks on one’s credibility. For those who do have access to data, the arrangement is usually ad hoc, part of an unbalanced exchange between a company and the researchers, as exemplified in the recent debacle between researchers at New York University and Facebook. To add to that, there has been little headway on regulation that outlines a framework for transparency reporting by industry on their operations, or rules for facilitating data-sharing, and what regulation does emerge often leaves the details to working groups to sort out.

There have been at least three major changes in the field of researching and countering influence operations since 2016.

First, there is far more interest in this subject now than before 2016. This interest tends to be event driven and led by media coverage about influence operations. Before 2016, much of the influence operations research focus was on violent extremism, as terrorist groups such as the Islamic State dominated news coverage and leveraged social media to grow their membership bases. Events continue to drive interest in influence operations, as well as changes in countering them—none more than Trump’s surprise victory in the 2016 election. More recently, the coronavirus pandemic pushed social media platforms to increase the number of publicly disclosed changes to the design and functionality of products, making 66 interventions in 2020, and provided governments with the renewed impetus to introduce new legislation to counter influence operations.

The second major change, as a result of this growing interest, is increased funding and job opportunities. For example, the U.S. Department of Defense recently announced a nearly $1 billion deal to counter misinformation (without sharing much about what that entails). Such investments have led some observers to dub the field the “disinformation industrial complex.” This growth in public interest and funding has helped support new initiatives and research on influence operations. For example, some 60 percent of academic studies measuring the effects of influence operations have been published since 2016. The field working on influence operations is eclectic, with people coming from a variety of academic disciplines and sectors, driving initiatives around fact-checking, media literacy, academic research, investigations, policymaking, and development of tools for potentially countering influence operations.

Third, with this increased capacity for investigating influence operations, pockets of collaboration have emerged to help detect examples of campaigns. This has resulted in ad hoc collaboration between external investigators and social media platforms where the influence operations increasingly occur, but also issue-specific initiatives, such as the Election Integrity Partnership, which was stood up before the 2020 U.S. presidential election as “a coalition of research entities focused on supporting real-time information exchange between the research community, election officials, government agencies, civil society organizations, and social media platforms.” These cross-sector collaborations are important; however, they are often event driven and operate for a short period of time, making it difficult to develop longer-term capacity. Moreover, as most funding in this space is project based, fewer investments are made into initiatives that can bring together the disparate, and sometimes competitive, stakeholders required to address influence operations, including from academia, civil society, governments, industry, and media, to name a few.

A central problem is that stakeholders aren’t even using the same vocabulary to describe the problems and solutions. Many different terms are commonly used, sometimes interchangeably, including propaganda, fake news, information operations, hybrid warfare and manipulative interference. Indeed, in the Partnership for Countering Influence Operations’ first community survey, “one-third of respondents … cited the lack of agreed-upon definitions as the most or second-most important challenge in the field.” But the gap is wider than terminology.

Beyond not speaking the same language, stakeholders often have a weak understanding of how the others work. For example, policy proposals to regulate social media platforms often lack insight into the tech companies they aim to govern, offering little detail or guidance for implementation and leaving it to industry to figure out. Similar gaps crop up in other domains. Academic research on influence operations is aimed at getting published, not at policy development, and often does not explain to policymakers in either industry or government how the research might be useful. For outsiders attempting to engage tech companies, navigating the firms’ byzantine organizational structures alone can be challenging, and the polished, preapproved company talking points can come across as shallow. To put it another way, the fields of researching and countering influence operations lack the glue to hold them together.

The field also lacks standards for conducting investigations of influence operations.

Around half of the initiatives researching and countering influence operations are led by civil society, through nonprofit organizations like think tanks and nongovernmental organizations, their work falling outside of the academic peer-reviewed process. Even in academia, the vast array of disciplines brought to bear in researching influence operations can make finding universal standards for reviewing the quality of outputs challenging. Good research is based on reliable data and methodologies, both of which require shared standards.

Despite issues in discerning good-quality research on influence operations from bad, there is a low bar for being picked up in media coverage. Influence operations make for good headlines and lots of clicks, regardless of the quality of the research. This has led to all sorts of issues, such as making faulty attribution of who is behind an influence operation (and no, it’s not always Russians) and mistaking correlation for causation. Misattribution can have consequences, particularly when individuals are blamed falsely. And just because two outcomes happen at the same time—troll farms and a surprise election victory, for example—does not automatically mean that one caused the other. In an election, like in many other contexts, many factors go into voters’ decisions. Is it possible to isolate and measure the influence of any single medium? Are voting choices influenced more by parents, peer groups or the economy?

The fact is, researchers’ understanding of influence operations beyond case studies showcasing examples of campaigns is weak. Little is known about how the information environment works as a system. Most research on influence operations focuses on specific campaign activity, often in isolation from the audience it targets or the ecosystem in which it spreads. Put another way, researchers know little about the flow of information between different types of online platforms, through influencers, and into the media.

Knowledge about the effects of influence operations also remains limited. Most research on the effects of influence operations focuses on traditional mass media. Where social media is the focus of measuring the effects of influence operations, most work explores short-term effects only. Little is known about the longer-term effects of influence operations conducted over social media. How does engagement with digital media change user beliefs or views over time? Does repeated exposure to anti-vaccination influence operations make people more or less likely to get inoculated, and how long do those views last? Are there other impacts from such exposure, like a reduced trust in media or government, and what do those potential shifts mean for civic engagement, such as voting or compromising with others who hold different views?

Even less is known about the efficacy of common countermeasures. Most research assessing the impact of interventions focuses on fact-checking, content labeling, and disclosures made by social media companies to those users potentially exposed to influence operations. Results are promising for fact-checking as an immediate countermeasure, reducing the impact of false information on beliefs as well as subjects’ tendency to share disinformation with others. However, very few researchers have studied interventions by social media companies, such as deplatforming, algorithmic downranking or content moderation. While social media platforms have introduced new policies and experimented with interventions to counter influence operations, there is little publicly available information on whether those measures work—in fact, one count from early 2021 found that platforms provided efficacy measurements for interventions in only 8 percent of cases.

Despite these snags, this emergent field has come far. But the road ahead is long and not without bumps.

Stable funding and more coordination between stakeholders are required to counter influence operations. The field is fragile and fragmented. Funding tends to be project based, meaning most initiatives lack resources to fund ongoing operations. Likewise, the need to show results and success to donors encourages initiatives to overinflate their effectiveness as they seek continued support for projects. While the disparate community of researchers increasingly connects through professional convenings, collaboration between experts is still ad hoc. For example, experts don’t tend to build on each other’s work, as demonstrated by a lack of citations between them in drafting similar policy recommendations. Between sectors there are significant disconnects, with academics, civil society, industry and government often operating within their own communities, diminishing their ability to understand each other and their respective roles. Moreover, in some sectors there is a lack of coordination when it comes to influence operations. Nowhere is this more apparent than within the U.S. federal government: Who in government is even responsible for understanding not just countering efforts but also how the country engages in influence operations?

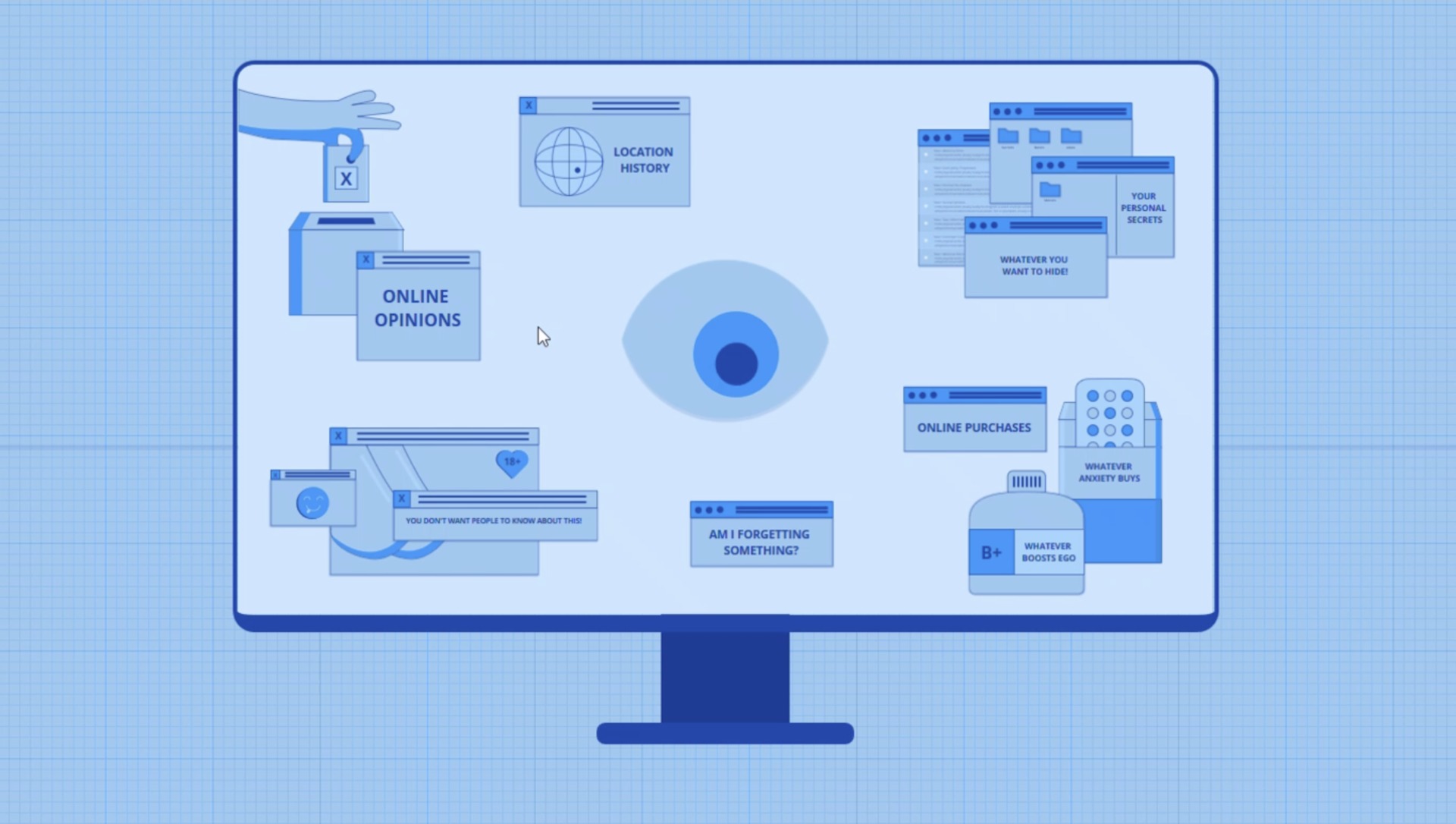

For key stakeholders to change, incentive structures also need to change. Most major actors who can play a big role in countering influence operations have little incentive to do so. Indeed, most are incentivized to do nothing or, worse, to engage in influence operations themselves. Politicians have the capacity to set rules around the use of influence operations—but also benefit from them. And those who do try to introduce legislative changes quickly discover how challenging it can be to draft legislation when there are definitional issues in simply articulating the problem, as was the case in Sen. Klobuchar’s Health Misinformation Act. Social media platforms make billions in revenue off connecting people and using the data generated to enable advertisers to target them. On the content generation side of the problem, business models don’t just color industry decision-making; media (mainstream and alternative alike) are in a competition for audiences and advertising revenue. Click-bait headlines and persuasive ad tech are all part of the news media practice to grow audiences and attract advertisers to target them. Often overlooked is the role of audiences, both in wanting to consume salacious and misleading material, but also in propagating it. Researchers have to stop admiring the problem and, instead, look more closely at what drives engagement with influence operations, how the phenomenon occurs within a wider information ecosystem, and how the information environment can be improved.

But it’s not just a need for funding in the short term to stand up groups to take on these problems. What’s really needed are strategic, longer-term investments into researching influence operations. Indeed, much of what needs to be done now is the unsexy legwork to ensure data-sharing and research collaboration can occur at a level that addresses the gaps outlined above.

These investments should start with articulating a framework for comprehensive transparency reporting and compelling companies to participate. Most researchers have little idea what data is aggregated by and available from industry. Transparency reporting (regularly issued statements by social media companies, disclosing aggregated statistics related to usage of the platforms, requests for data access, how the platform works, policy development and enforcement, and external requests for interventions) could help researchers understand what data might be available for research purposes. A basic first step would be to create a comprehensive transparency reporting framework, complete with prioritization for rolling out different aspects and identifying who should have access to what types of reporting. Such reporting might encompass user-level reporting (how people are engaging on the platform); data access requests (who is asking for what kinds of information and the processes for how those requests are handled); platform-level reporting (how the system works and decision-making around that); policy and enforcement (how rules are created and acted against over time); requests for interventions (who is asking for content to be taken down and the process behind that); and third-party use of the platform (who has access to data and ad tech tools and how those data and tools are being used).

In tandem, rules for data-sharing must also be developed. It’s clear that ad hoc relationships are insufficient to foster deeper insights into influence operations, but sharing data tied to individuals is fraught with challenges. Who should have access to what types of data, and for what purposes? How will the data be transferred and stored? How will the users who created this data be protected? How will abuses be handled? Who will oversee this process? These questions all need to be answered, ideally not by the companies holding the data, but as a society, through an open process. Fortunately, there are also models to draw from—society has managed the exchange of sensitive data for transparency and research purposes before in the health and finance sectors.

Ideally, both transparency reporting and data-sharing rules would be developed at an international level. As countries introduce regulation on social media, companies could face hundreds of variations on requirements around transparency reporting, data-sharing and interventions. While each country is different, a broad transparency reporting framework and data-sharing rules could be universal. Taking a joint approach would also increase the likelihood of companies meeting such requirements should they be introduced. This is particularly important for smaller countries that might not have the clout to make industry jump but could more readily benefit from a collective move that is much too big to ignore.

Finally, with reporting and rules guiding the process, longer-term research collaboration must also be facilitated. A permanent mechanism is needed for sharing data and managing collaborations that ensures the independence of researchers. Given the costs associated with and expertise required to conduct measurements research, an international model whereby several governments, philanthropists, and companies contribute to an institution or mechanism could be the boost this field needs.

Even if data-sharing challenges could be solved, the fact that academic research is seldom designed with policy development in mind means that critical learnings are not being translated and used systematically to support evidence-based policy development. Moreover, conducting measurements related to influence operations, especially over time, requires the cooperation of the platform studied, if for no other reason than to keep the research team informed of changes made to it that could affect the research. A new institution to enable effective collaboration across industry, academia and government is badly needed. One model is a multi-stakeholder research development center, which would enable cross-sector collaboration with an emphasis on facilitating information-sharing to test hypotheses and design countermeasures and intervention strategies. Another follows the model of the European Organization for Nuclear Research, or CERN, which is supported by several countries working with multiple research organizations to create an international network, thus fostering a wider field. An international approach would also help address the imbalance in available research that skews toward the Global North, thus helping those in countries where malign influence operations are a matter of life and death. Another benefit of an international model is that it bakes in a fail-safe should any single member country slide into autocracy, ensuring that such a center cannot be abused.

Such a center would provide an independent space for collaboration between social media platforms and external researchers, with an eye toward integrating a policy focus into the research design, outputs and recommendations made. The pace and proliferation of influence operations outstrip the research and policy community’s ability to study, understand, and find good solutions to the problems presented by the new age of digital propaganda. It’s time to fix this.

Carnegie’s Partnership for Countering Influence Operations is grateful for funding provided by the William and Flora Hewlett Foundation, Craig Newmark Philanthropies, Facebook, Google, Twitter, and WhatsApp. The PCIO is wholly and solely responsible for the contents of its products, written or otherwise. We welcome conversations with new donors. All donations are subject to Carnegie’s donor policy review. We do not allow donors prior approval of drafts, influence on selection of project participants, or any influence over the findings and recommendations of work they may support.